5 Probability

5.1 Basic Concepts

5.1.1 Random Experiments and Sample Space

At the beginning of our considerations, we introduce the concept of an experiment. By this, we understand a process that is conducted under precisely defined conditions and produces a result that can be observed.

Every experiment that has at least two possible outcomes, we call a random experiment. Or to put it another way: any experiment that, if repeated under the same conditions, does not always yield the same result, is not a random experiment.

The essence of a random experiment is the fundamental unpredictability of its outcome. This raises the immediate question: how can one develop a mathematical theory for processes that exhibit this very property of unpredictability?

The answer lies in repetition. When a random experiment is repeated many times, patterns begin to emerge gradually that eventually solidify into laws, laws that are not only of extraordinary beauty and aesthetics but also allow for predictions of breathtaking accuracy.

To mathematically describe a random experiment, we need some basic concepts. We begin with:

Definition 5.1 (Sample Space) The sample space \(\Omega\) of an experiment is the set of all possible outcomes an experiment can have.

Another basic concept is that of an event.

5.1.2 Events

Definition 5.2 (Event) An event is a statement about a possible outcome of a random experiment. Thus, every event corresponds to a subset of the sample space, namely, the subset of outcomes for which the statement is true.

It is customary to denote events by Latin capital letters, preferably from the first half of the alphabet, e.g., \(A\), \(B\), etc.

To better understand the concept of an event, let us look at an example.

Exercise 5.3 A random experiment consists of throwing a regular six-sided die, whose sides are numbered \(1,2,\ldots,6\), exactly one time. Represent the event {I roll an even number} as a subset of the sample space \(\Omega\).

Solution: The sample space is \(\Omega=\{1,2,\ldots,6\}\). The event {even number} corresponds to the subset \(A=\{2,4,6\}\). If one rolls a number (e.g., the number 2) that is contained in the subset \(A\), then the event \(A\) has occurred. □

In this task, the sample space was relatively small, so we could easily express \(\Omega\) as a list.

Very often, however, the sample space contains an enormous number of elements, so that it is no longer sensible or even possible to list \(\Omega\). But this is not usually necessary, as we are mostly interested in the size or cardinality of this set, which we denote by \(|\Omega|\).

Exercise 5.4 In a lottery, tickets numbered \(1,2,\ldots,45\) are offered for drawing. The drawing consists of selecting a sample of \(6\) tickets (consecutively and without replacement). Determine the extent of the sample space and that subset which corresponds to the event {jackpot}.

Solution: The sample space \(\Omega\) is the set of all lists (ordered sets) of length six that can be formed from the numbers \(1,2,\ldots,45\).

How many elements does \(\Omega\) contain? It is simple: for the first drawing, we have 45 options, for the second only 44, because the drawing is conducted without replacement. Hence, for each subsequent drawing, the number of possibilities diminishes by one. In total, we have: \[ \begin{gathered} |\Omega|=45\cdot 44\cdot 43\cdot 42 \cdot 41 \cdot 40=5\,864\,443\,200 \end{gathered} \] possibilities. In this lottery, a bet consists of indicating a set of 6 numbers. This bet is then the jackpot if the numbers of the bet, aside from the order, match the results of the drawing. The event \(A=\{\textit{jackpot}\}\) therefore corresponds to the subset \(A\subseteq\Omega\), which consists of all permutations of the drawn betting sequence. This subset \(A\) contains \[ \begin{gathered} |A|=6\cdot 5\cdot 4\cdot 3\cdot 2\cdot 1=6! = 720 \end{gathered} \] elements. We see that, in comparison to \(\Omega\), \(A\) is a very small set and therefore we expect that the event \(A\) will be observed rather seldom in the experiment. □

Random Variables

In many cases, events are defined by random variables. A random variable \(X\) is a random number observable during the random experiment. Mathematically, a random variable \(X\) is a function (assigning rule) that assigns a number \(X(\omega)\) to every possible outcome \(\omega\in\Omega\) of the random experiment. Any statement about the random variable is an event. The great advantage of random variables is that we can calculate with them. We can add them, multiply them, etc.

Exercise 5.5 A die with the numbers \(1,2,\ldots,6\) is thrown twice. Let \(X\) be the number rolled on the first throw, \(Y\) the number on the second throw. Determine the subset of the sample space corresponding to the event \(\{X+Y<4\}\).

Solution: In this experiment, the set of outcomes \(\Omega\) consists of all pairs \((a,b)\) that we can form from the numbers \(1,2,\ldots,6\): \[ \begin{gathered} \Omega=\{(1,1),(1,2),\ldots,(6,6)\},\quad |\Omega|=36. \end{gathered} \] Then, we have \[ \begin{gathered} \{X+Y<4\}=\{(1,1),(1,2),(2,1)\},\quad|\{X+Y<4\}|=3. \end{gathered} \] □

5.1.3 Combination of Events

We have already established that events are subsets of the set of outcomes \(\Omega\). Since every set contains the empty set \(\varnothing\) and itself as a subset, that is, \(\varnothing\subset \Omega\) and \(\Omega\subset \Omega\), \(\varnothing\) and \(\Omega\) are also events, albeit of a special kind:

\(\varnothing\) is the impossible event, because the experiment necessarily must have a result that lies within \(\Omega\).

\(\Omega\) is the certain event, because \(\Omega\) contains all possible outcomes.

As we know, new sets which are themselves events can be formed from subsets of \(\Omega\) through simple set operations.

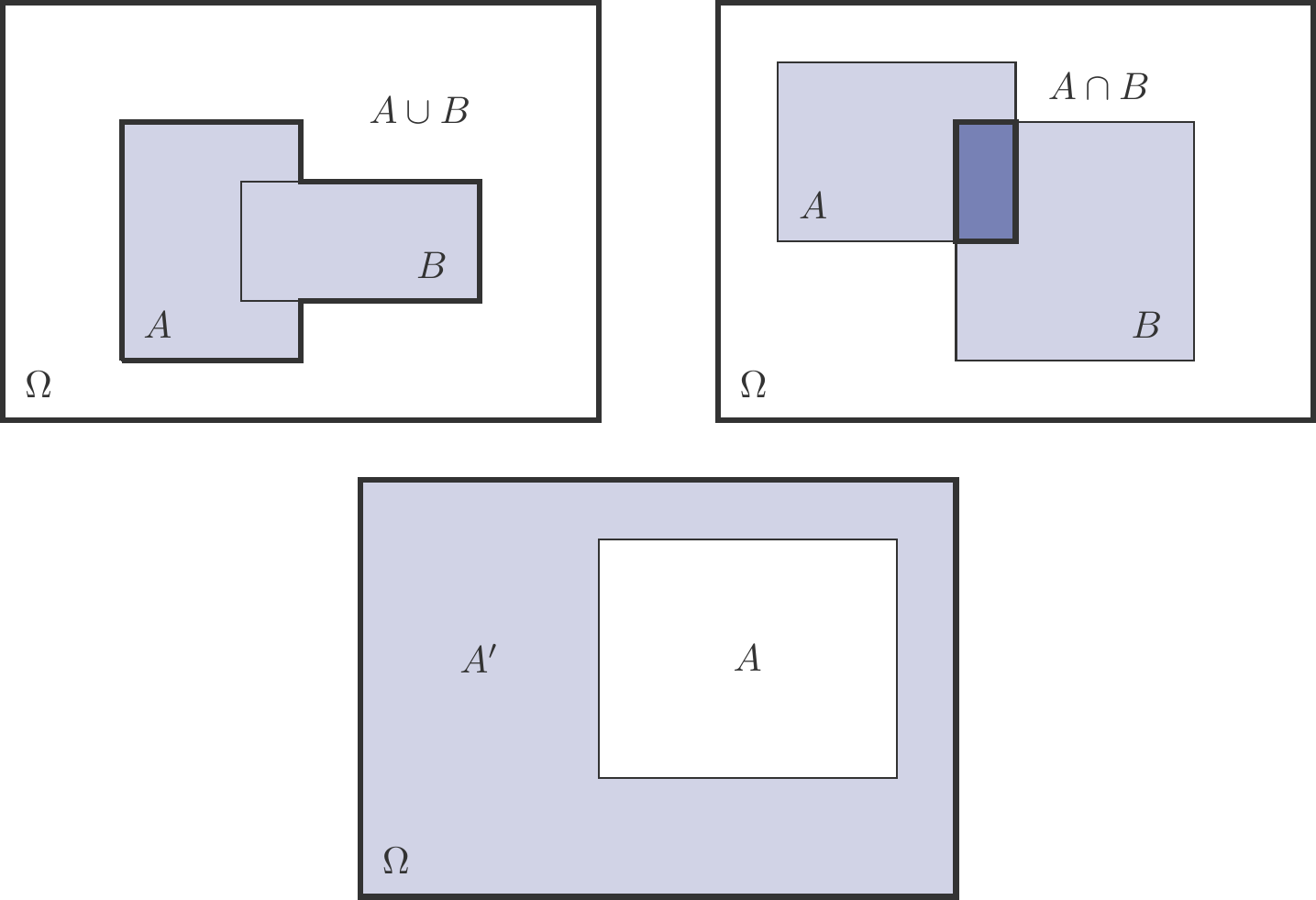

The most important set operations and their interpretations are (see Figure 5.1):

\(A\cup B\) – the union of two sets

This is the set of all elements that belong to \(A\) or \(B\). Interpreted as a random event: Event \(A\) or \(B\) occurs.\(A\cap B\) – the intersection of two sets

This is the set of all elements that belong to both \(A\) and \(B\). Interpreted as a random event: Both events \(A\) and B occur.\(A'\) – the complement of a set

This is the set of all elements that do not belong to \(A\). As a random event, the complement is interpreted as: the event \(A\) does not occur.

An important special case occurs when \(A\cap B=\varnothing\). In this case, we say that the two events \(A\) and \(B\) are incompatible; they exclude each other. In short, \(A\cap B\) cannot occur.

For example, consider the experiment of rolling a die once and let \[ \begin{gathered} A=\{\text{even number}\}=\{2,4,6\},\quad B=\{\text{odd number}\}=\{1,3,5\}. \end{gathered} \] Then, \(A\cap B=\varnothing\) because it is not possible when rolling a die once to obtain a number that is both even and odd at the same time.

In a gambling game, a series of \(n\) individual games is played, with each individual game having the possible outcomes win (\(G\)) and loss (\(V\)). Therefore, the set of outcomes \(\Omega\) for the series of games consists of all lists of length \(n\) that can be formed from the two letters \(G\) and \(V\). It contains \(|\Omega|=2^n\) lists.

This is easy to understand: each list has \(n\) positions, and for each position we have two options for filling it, namely \(G\) or \(V\). Hence, the number of different lists is \(2^n\).

Let \(S_n\) be the frequency (number) of occurrences of the letter \(G\) in the outcome \(\omega\in \Omega\). Then \(S_n\) is a random variable with possible values \(0,1,\ldots,n\). This random variable indicates the number of wins in the series of games.

The event {at least \(k\) wins} can be symbolically represented by \(\{S_n\ge k\}\). It corresponds to the set of all lists \(\omega\in \Omega\) that contain the letter \(G\) at least \(k\) times.

Exercise 5.7 In a gambling game, a series of \(5\) individual games is played, each of which has the possible outcomes {win} (\(G\)) and {loss} (\(V\)). What is the proportion (percentage) of series in which exactly one win occurs?

Solution: \(\Omega\) is the set of all 5-lists that we can form from the letters \(G\) and \(V\), thus \(|\Omega|=2^5=32\). Let \(A=\{\mathit{exactly~one~win}\}\).

Then \(|A|=5\), because only these 5 lists in \(\Omega\) contain exactly one letter \(G\): \[ \begin{gathered} \mathit{GVVVV},\quad\mathit{VGVVV},\quad \mathit{VVGVV},\quad \mathit{VVVGV}, \quad\mathit{VVVVG}. \end{gathered} \] Therefore, the proportion is \(5/2^5=0.15625\), which is 15.625%. □

The percentage just calculated is our first example of a probability.

5.1.4 Probabilities

Suppose a random experiment with the outcome set \(\Omega\) is given. It is said that an event \(A\) has occurred if, after conducting the random experiment, the realized outcome \(\omega\) is contained in the subset \(A\subseteq\Omega\). This means nothing else than that the statement corresponding to the event \(A\) about the outcome is accurate.

It is fundamentally unpredictable before a random experiment is performed whether a particular event will occur or not. Nonetheless, it is usually the case that after numerous repetitions of the random experiment, some events have occurred more frequently than others. Therefore, it makes sense to use the statistical frequency of occurrence of events as a basis for constructing the concept of the probability of an event.

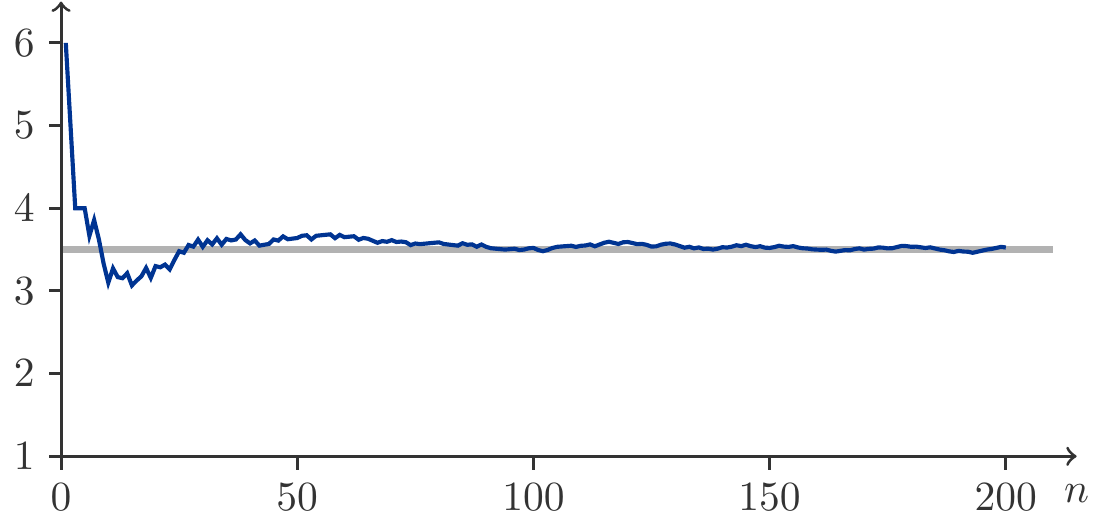

Definition 5.8 Let \(f_n(A)\) be the relative frequency at which the event \(A\) occurs in a series of \(n\) repetitions of the random experiment. The idea now is that this relative frequency approaches a fixed percentage as the number of repetitions increases: \[ \begin{gathered} \lim_{n\to\infty} f_n(A)=:P(A). \end{gathered} \tag{5.1}\] This limit \(P(A)\) of the relative frequencies is called the probability of \(A\).

This intuitive explanation of the concept of probability just mentioned is not a definition in the mathematical sense. It is merely an assumption that is supposed to make it possible to empirically grasp the theoretical concept of probability. One could refer to this assumption as the empirical law of large numbers. The usefulness of this assumption has been demonstrated by the fact that the mathematical probability theory (stochastic) based on it can make valid statements about real-world applications.

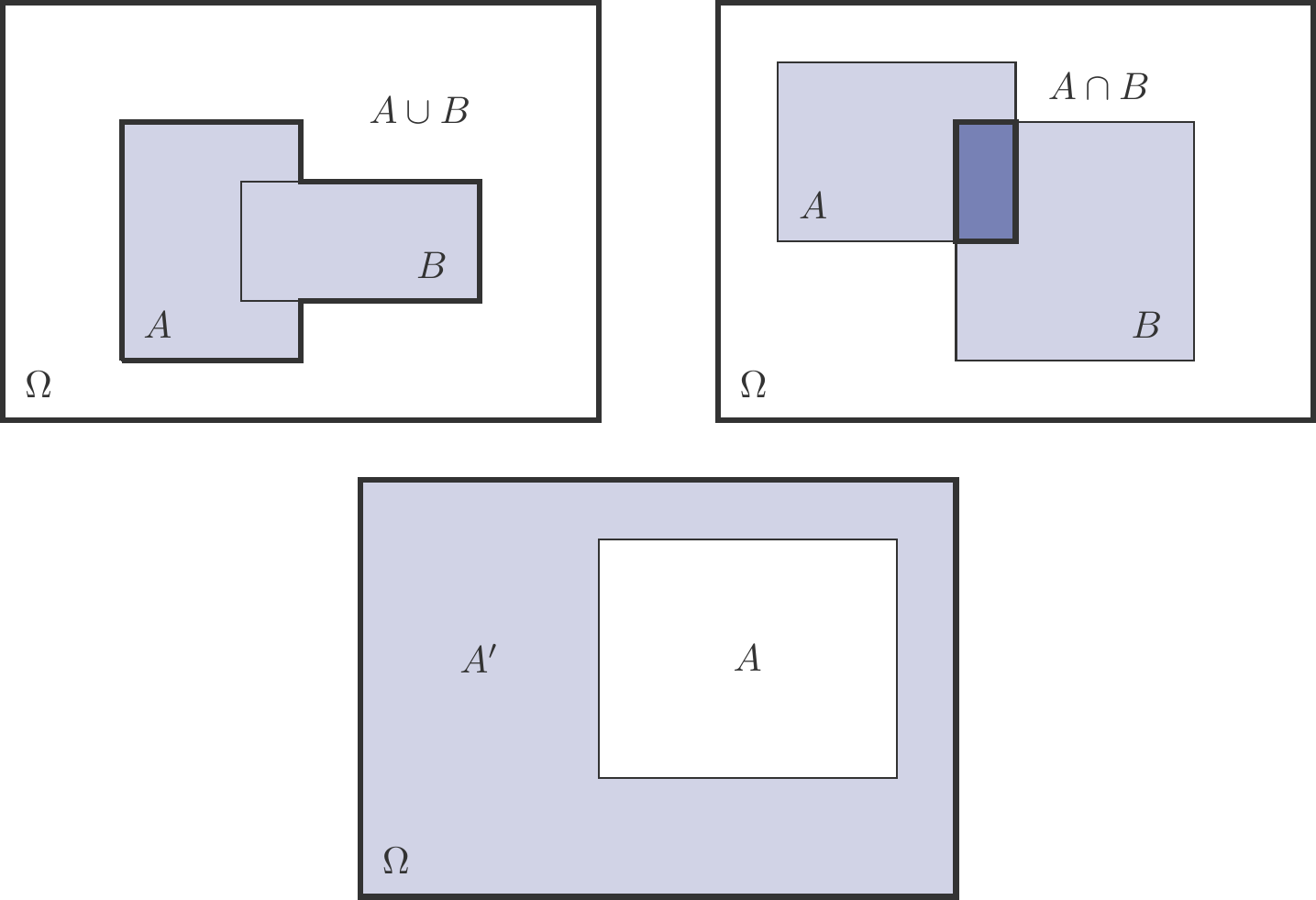

For Figure 5.2, 200 coin tosses were simulated. We see how the relative frequency \(f_n(A)\) of the event \(A=\{\mathit{heads}\}\) approaches the value \(1/2\).

From the intuitive explanation of the concept of probability, we derive the fundamental properties of probabilities:

Theorem 5.9 (Properties of Probabilities) Let \(\Omega\) be the outcome set of a random experiment, and let \(A\), \(B\), \(C\),… be observable events. Then the following laws apply to the probabilities of the events:

\(0\le P(A) \le 1\).

\(P(\Omega)=1\): The certain event has a probability of 1.

\(P(\varnothing)=0\): The impossible event has a probability of 0.

If \(A\cap B=\varnothing\), meaning the events \(A\) and \(B\) are mutually exclusive, then the addition law applies: \[ \begin{gathered} P(A\cup B)=P(A)+P(B). \end{gathered} \tag{5.2}\]

The Law of Addition is the most important rule for calculating probabilities.

The numerical determination of probabilities using the Law of Large Numbers (Definition 5.8) often involves significant effort. However, there are situations where we can achieve our goal more easily. To do so, two conditions must be satisfied.

Definition 5.10 (Classical Probability Concept) If

\(\Omega\) is a finite set, and

all outcomes of the experiment are equally likely,

then for all events \(A\subset \Omega\): \[ \begin{gathered} P(A)=\frac{|A|}{|\Omega|}=\frac{\text{Number of favorable cases}}{\text{Number of possible cases}}\,. \end{gathered} \tag{5.3}\]

It can be shown that the classical concept of probability possesses all the properties required by Theorem 5.9. However, whether the conditions of Definition 5.10 are met must be checked on a case-by-case basis. The hypothesis of equal likelihood in particular is often critically viewed. Nevertheless, statistics provide methods that allow testing this hypothesis.

If all conditions are met, then determining probabilities with Definition 5.10 is in principle simple. We just need to determine the sizes (cardinalities) of the set of possible outcomes \(\Omega\) and the events \(A\), \(B\), etc. we are interested in by counting.

Exercise 5.11 A die is thrown once. What is the probability of getting an even number?

Solution: We have already established: \[ \begin{gathered} \Omega=\{1,2,3,4,5,6\},\quad |\Omega|=6;\qquad A=\{2,4,6\},\quad |A|=3. \end{gathered} \] From (5.3) it follows that: \[ \begin{gathered} P(A)=\frac{|A|}{|\Omega|}=\frac{3}{6}=\frac{1}{2}. \end{gathered} \] This result is valid under the assumption that the die is fair, i.e., the hypothesis of equal likelihood is fulfilled.

We could also approach the problem experimentally by invoking the empirical Law of Large Numbers (5.1). To do this, it would be necessary to throw the die very often, perhaps a few thousand times, and count how often an even number appears. The relative frequency should be close to \(0.5\) with an increasing number of trials. We expect a graph very similar to Figure 5.2. □

Exercise 5.12 What is the probability of hitting the jackpot in the lottery 6 out of 45?

Solution: In the solution of Exercise 5.4 we have already found: \[ \begin{aligned} |\Omega| & =45\cdot 44\cdot 43\cdot 42\cdot 41\cdot 40=5\,864\,443\,200,\\ |A| & =6!=720,\\ \implies P(A) =\frac{|A|}{|\Omega|} & =\frac{720}{5864443200} =0.0000001227738\,. \end{aligned} \] □

The interpretation of probability as the long-term expected proportion of realizations enables an estimation of the number of realizations in a large series of trials.

Exercise 5.13 In Austria, it is estimated that 8.5% of insurance claims reported to insurance companies are fraudulent or manipulated across all sectors. How many fraud cases per year should an insurance company expect to deal with if it processes 150,000 claims per year?

Solution: Let \(N=150000\) be the number of insurance claims per year and \(p=0.085\) the percentage of fraudulent insurance claims. The expected frequency of fraud cases per year is \[ \begin{gathered} Np=150\,000\cdot 0.085= 12750. \end{gathered} \] □

Exercise 5.14 A company is giving away a vacation trip to one of its 8000 customers through a scratch game. In this scratch game, exactly three correct squares out of ten must be scratched off to win. How many winners of the vacation trip should the company expect?

Solution: Let’s assume that the participants scratch off the fields completely at random. This means all \(10\cdot 9\cdot 8=720\) possibilities of scratching off three fields in a row have the same probability \(1/720\). There are \(3\cdot 2\cdot 1=6\) ways to hit the three correct fields in a different order. Thus, when scratching a scratch card, the event \(A=\{\textit{Winning the vacation trip}\}\) has the probability \[ \begin{gathered} P(A)=\frac{6}{720}=0.00833. \end{gathered} \] With \(N=8000\) participants, one would therefore expect \(N\cdot P(A)= 8000\cdot 0.00833=66.67\approx 67\) winners. □

5.1.5 A Generalization of the Addition Law

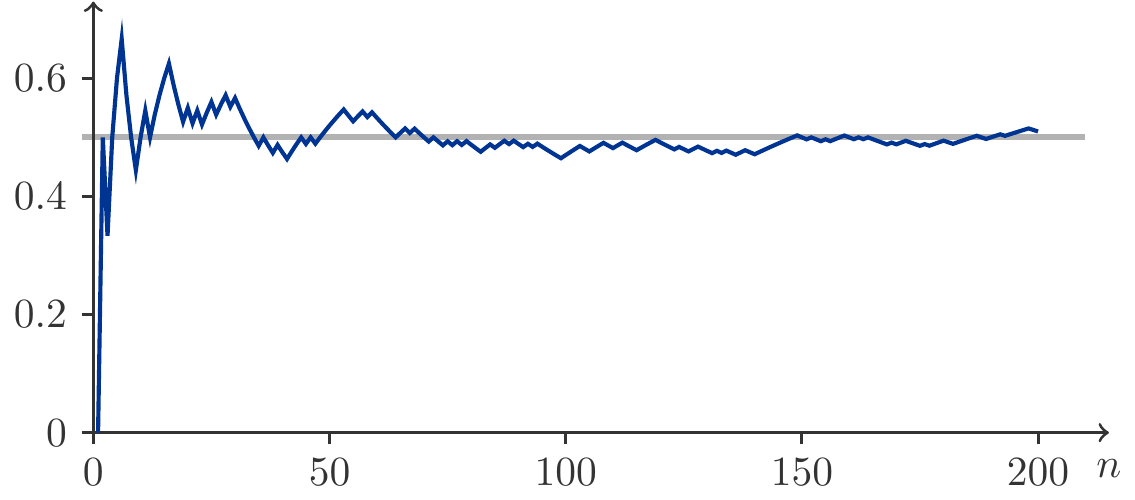

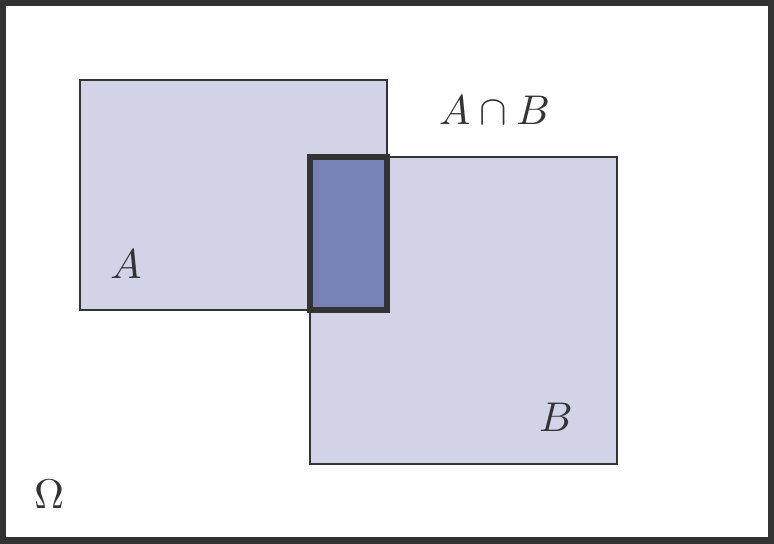

The addition law (5.2) allows us to calculate the probability of \(A\) or \(B\) occurring if the events \(A\) and \(B\) are incompatible, meaning \(A\cap B=\varnothing\). However, if \(A\) and \(B\) are not incompatible, then a more general formula applies: \[ \begin{gathered} P(A\cup B)=P(A)+P(B)-P(A\cap B). \end{gathered} \tag{5.4}\] An intuitive explanation is provided by looking at Figure 5.3.

To determine the probability that \(A\) or \(B\) occurs, we cannot simply add the probabilities of \(A\) and \(B\) because generally \(A\cap B\ne \varnothing\). Then the outcomes that belong to \(A\) and \(B\) would be counted twice, so we must subtract \(P(A\cap B)\) once. This is exactly the statement of (5.4).

Exercise 5.15 (Solving a mathematical problem) Student \(A\) solves a mathematical problem with a probability of \(3/4\), while student \(B\) does so with only a probability of \(1/2\). The probability that both solve the problem is \(3/8\).

What is the probability that the problem gets solved?

Solution: From the given information: \(P(A)=\dfrac{3}{4},\; P(B)=\dfrac{1}{2}, \;P(A \cap B)=\dfrac{3}{8}\).

If the problem is solved, it means: \(A\) solves the problem, or \(B\) manages to solve it, or both succeed.

We are thus looking for \(P(A\cup B)\). Using the addition law (5.4): \[ \begin{aligned} P(A\cup B)&=P(A)+P(B)-P(A\cap B)\\ &=\frac{3}{4}+\frac{1}{2}-\frac{3}{8}=\frac{7}{8}=0.875\,. \end{aligned} \]

5.1.6 Random Variables

Probabilities for events expressed by random variables can be calculated in a similar way. The law of large numbers (5.1) is available to us, just like the classical concept of probability (5.3).

Exercise 5.16 A die is rolled twice. Let \(X\) be the result of the first roll and \(Y\) the result of the second roll. What is the probability that \(P(X+Y<4)\)?

Solution: Since all \(6\cdot 6=36\) possible outcomes (eye pairs) of \((X,Y)\) are considered equally likely, each has the probability \(1/36\). There are exactly 3 outcomes whose sum of eyes is less than 4, namely \[ \begin{gathered} \{X+Y<4\}=\{(1,1),(1,2),(2,1)\}. \end{gathered} \] Therefore, \[ \begin{gathered} P(X+Y<4)=\frac{3}{36}=0.0833\,. \end{gathered} \]

Exercise 5.17 A die is rolled twice. Let \(X\) be the result of the first roll and \(Y\) the result of the second roll. What is the probability that \(P(X-Y=0)\)?

Solution: The sample space \(\Omega\) is the set of all 36 pairs \((a,b)\) where \(a,b=1,\ldots 6\). The event \(\{X-Y=0\}\) can only occur if \(X=Y\). There are exactly 6 possibilities: \[ \begin{gathered} \{X=Y\}=\{(1,1),(2,2),(3,3),(4,4),(5,5),(6,6)\}. \end{gathered} \] Therefore, \[ \begin{gathered} P(X-Y=0)=\frac{6}{36}\approx 0.1667\,. \end{gathered} \] □

Theorem 5.18 (Theorem of the Complementary Event) Let \(A\) be an event, then it follows: \[ \begin{gathered} P(A')=1-P(A). \end{gathered} \tag{5.5}\]

Justification: The event \(A\) and its complement \(A'\) are certainly incompatible, i.e., \(A\cap A'=\varnothing\). On the other hand, their union constitutes the sample space \(\Omega\), so \(\Omega=A\cup A'\). Thus, from the addition rule (5.2): \[ \begin{gathered} P(\Omega)=1=P(A)+P(A')\implies P(A')=1-P(A). \end{gathered} \] □

We consider a game of chance consisting of a series of individual games, each with possible outcomes \(G\) (win) and \(V\) (loss). Such a game is called symmetric if, in each individual game, winning and losing are equally probable and if the results of one game do not influence the results of another. Under these conditions, all \(2^n\) possible lists of results have the same probability \(1/2^n\).

Exercise 5.19 A player participates in a symmetric game of chance and plays 10 times. What is the probability that they will win at least once?

Solution: Let \(S_{10}\) be the number of wins in a series of 10 games. What is \(P(S_{10}\ge 1)\)?

To determine this, we consider the complement of \(\{S_{10}\ge 1\}\). What is the opposite of {at least one win in 10 games}?

It’s simple: \(\{S_{10}\ge 1\}'=\) {no single game was won out of 10 games}!

Hence \(\{S_{10}\ge 1\}'=\{S_{10}=0\}\) and therefore, by (5.5): \[ \begin{gathered} P(S_{10}\ge 1)=1-P(S_{10}=0). \end{gathered} \] However, the probability on the right-hand side is easy to determine because the underlying event corresponds to only a single outcome, namely losing all 10 games. This happens with probability \(1/2^{10}\). It follows that \[ \begin{gathered} P(S_{10}\ge 1)=1-\frac{1}{2^{10}}=0.9990234\,. \end{gathered} \] □

Exercise 5.20 A player participates in a symmetric game of chance. Let \(W\) be the waiting time (number of individual games) until the first win. Calculate \(P(W\le 8)\).

Solution: Again, we argue with the complement. It is \[ \begin{gathered} P(W\le 8)=1-P(W>8). \end{gathered} \] But if the event \(\{W>8\}\) has occurred, then we know something about the outcome of the first 8 games: \(W\) can only be \(>8\) if not a single game was won out of the first 8 games. Otherwise, \(W\le 8\). In other words: \[ \begin{gathered} P(W\le 8)=1-P(W>8)=1-P(S_8=0)=1-\frac{1}{2^8}=0.9961\,. \end{gathered} \]

Exercise 5.21 (A Paradox) What is the probability that in a group of 10 people at least two have a birthday on the same day (regardless of the year)?

Solution: We represent a birthday by a natural number between 1 and 365. That is, 1 corresponds to January 1st, and so on. The result set \(\Omega\) is the set of all possible lists of 10 numbers from \(1,2,\ldots,365\). And of course, birthdays can occur multiple times. It could be that all 10 people have their birthday on January 1st. The cardinality of \(\Omega\) is therefore: \[ \begin{gathered} |\Omega|=\underbrace{365\cdot 365\cdots 365}_{\text{10 times}}=365^{10}=4.2\cdot 10^{25}. \end{gathered} \] Let \(A\) be the event that at least two people have their birthday on the same day. What is \(A'\) then?

\(A'\) is the event that all 10 birthdays are different! The cardinality of \(A'\) is easy to find.

We form 10-item lists, where we have 365 possibilities for the first place, only 364 for the second place (since the birthdays must be different), and so on: \[ \begin{gathered} |A'|=365\cdot 364\cdot 363\cdots 356 = 3.7\cdot 10^{25}. \end{gathered} \] And thus we obtain: \[ \begin{gathered} P(A)=1-\frac{3.7\cdot 10^{25}}{4.2\cdot 10^{25}}\simeq 0.12\,. \end{gathered} \] This probability is surprisingly high. It becomes even clearer when we consider a group of 50 people. In this case, the probability that at least two people have their birthday on the same day is, \[ \begin{gathered} P(A)=1-\frac{3.9\cdot 10^{126}}{1.3\cdot 10^{128}}\simeq 0.97\,. \end{gathered} \] Thus, it is very likely that among 50 people, at least two will share the same birthday. Or in other words: it is very unlikely that all birthdays are different among 50 people. □

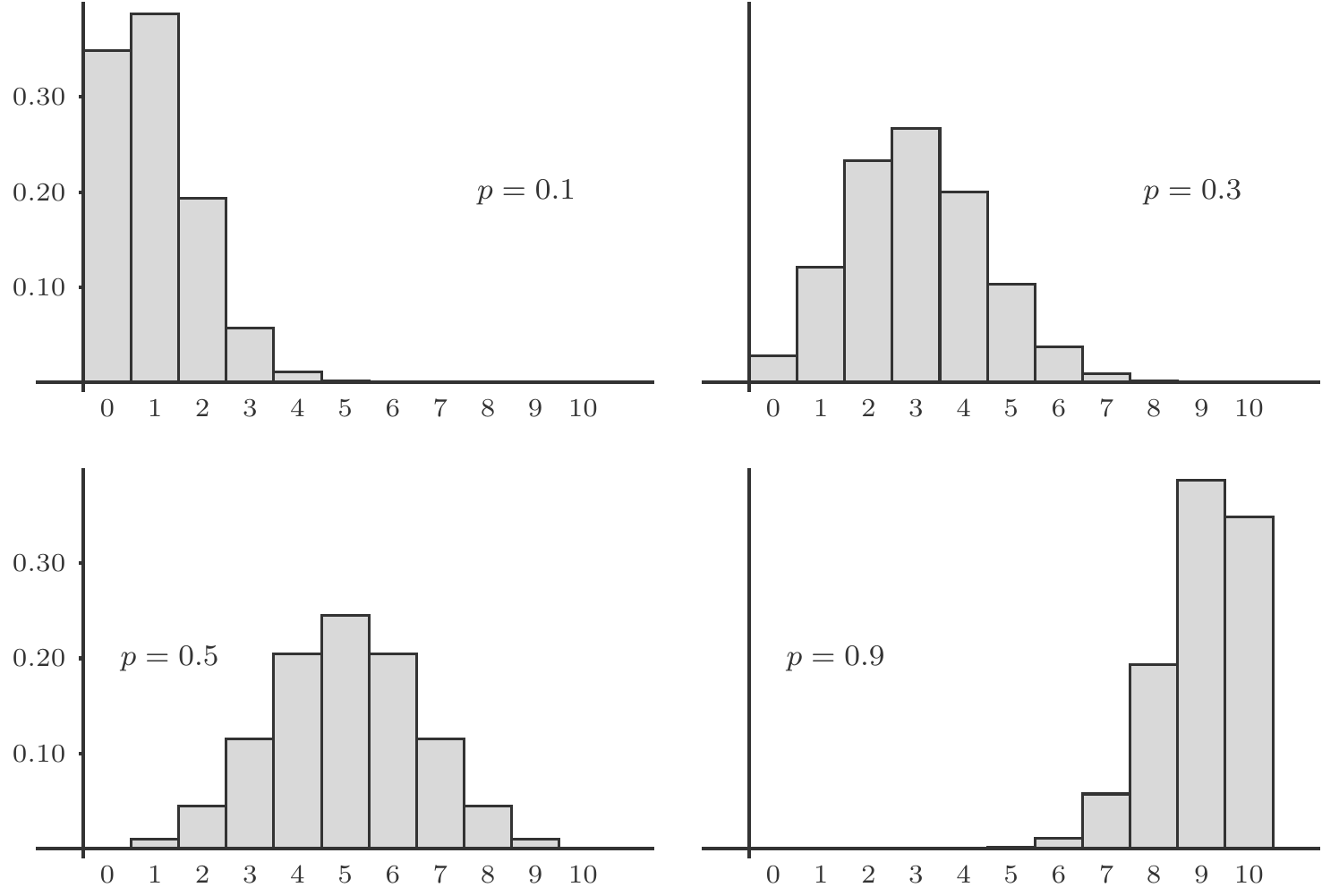

5.1.7 Discrete Distributions

All the random variables we have studied so far are discrete random variables. By this we mean that the set of their possible values is countable. Either this value set \(\mathcal S\) was finite, or it had no more elements than there are natural numbers.

In Exercise 5.16: \[ \begin{gathered} X\in\mathcal S_X,Y\in\mathcal S_Y,\quad \mathcal S_X,\mathcal S_Y=\{1,2,3,4,5,6\},\\ Z=X+Y\in \mathcal S_{Z}=\{2,3,4,\ldots,12\}. \end{gathered} \] In Exercise 5.17: \[ \begin{gathered} X\in\mathcal S_X,Y\in\mathcal S_Y,\quad \mathcal S_X,\mathcal S_Y=\{1,2,3,4,5,6\},\\ Z=X-Y\in\mathcal S_Z=\{-5,-4,-3,\ldots,3,4,5\}. \end{gathered} \] In Exercise 5.19: \[ \begin{gathered} S_{10}\in\mathcal S=\{0,1,2,\ldots,10\}. \end{gathered} \] In Exercise 5.20 the range was infinite for the first time: \[ \begin{gathered} W\in\mathcal S=\{1,2,3,\ldots\}=\mathbb N. \end{gathered} \] For all these and many other examples, it is easily possible to specify a function \(f_X(x)=P(X=x)\) for all values of \(x\in \mathcal S\). This function is called the probability function of the random variable \(X\). We can represent it by functional expressions or in the form of value tables.

For example Exercise 5.16, here \(Z=X+Y\): \[ \begin{gathered} f_X(x)=f_Y(x)=\frac{1}{6},\quad x=1,2,\ldots 6\\ \begin{array}{c|ccccccccccc} z & 2 & 3 & 4 & 5 & 6 & 7 & 8 & 9 & 10 & 11 & 12\\ \hline\\[-10pt] f_Z(z) & \frac{1}{36} &\frac{2}{36} & \frac{3}{36} & \frac{4}{36} & \frac{5}{36} & \frac{6}{36} & \frac{5}{36} & \frac{4}{36} & \frac{3}{36} & \frac{2}{36} & \frac{1}{36} \end{array}\ \end{gathered} \tag{5.6}\] Besides the probability function, we also need the concept of the distribution function \(F(x)\) of a random variable. It is defined by: \[ \begin{gathered} F(x)=P(X\le x)=\sum_{u\le x}f(u). \end{gathered} \tag{5.7}\] This is the cumulative sum of the values of the probability function. Unlike \(f(x)\), the distribution function \(F(x)\) is defined for all real numbers \(x\). It allows us to answer interesting questions directly.

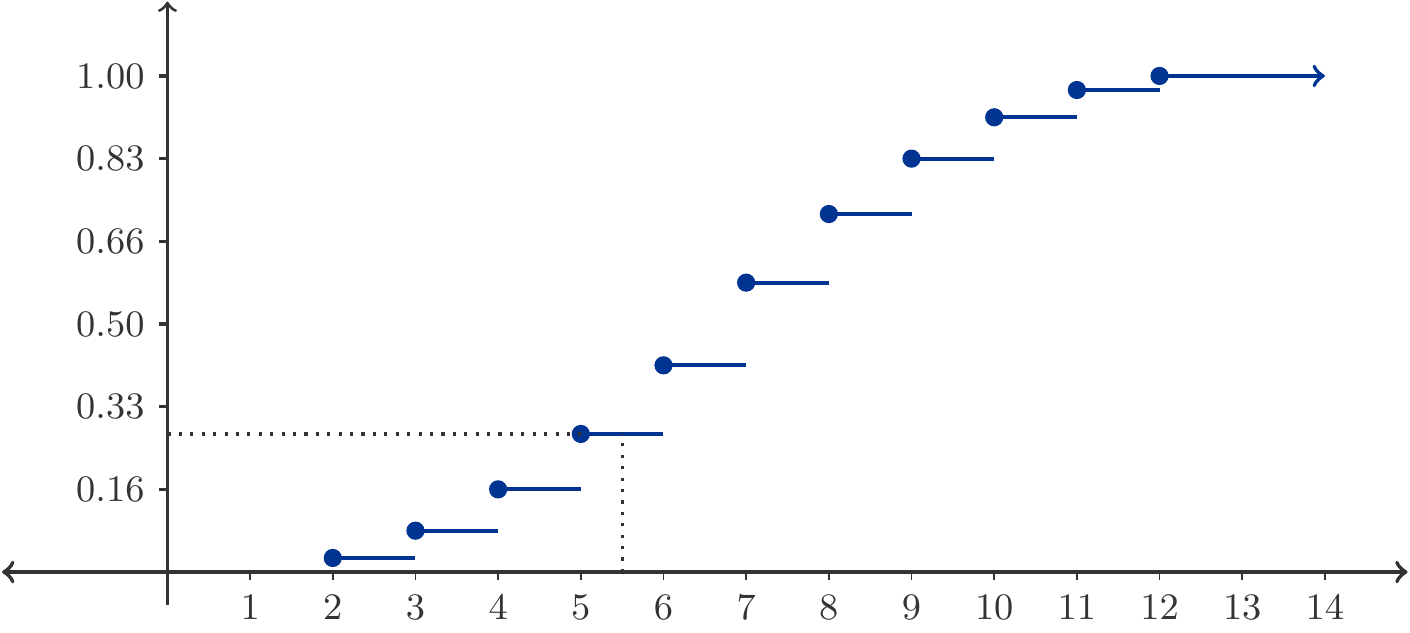

Let’s take, for example, the random variable \(Z=X+Y\), whose probability function is given in (5.6). From it we calculate: \[ \begin{aligned} F_Z(2)&=P(Z\le 2)=P(Z=2)=\frac{1}{36}\\[4pt] F_Z(3)&=P(Z\le 3)= P(Z=2)+P(Z=3)=\frac{3}{36}\\[4pt] F_Z(4)&=P(Z\le 4)=P(Z=2)+\ldots+P(Z=4)=\frac{6}{36}\\[4pt] &\ldots\\ F_Z(12)&=P(Z\le 12)=P(Z=2)+\ldots+P(Z=12)=1\ \end{aligned} \tag{5.8}\] We can represent \(F_Z(z)\) more clearly in tabular form: \[ \begin{gathered} \begin{array}{c|ccccccccccc} z & 2 & 3 & 4 & 5 & 6 & 7 & 8 & 9 & 10 & 11 & 12\\ \hline\\[-10pt] f_Z(z) & \frac{1}{36} &\frac{2}{36} & \frac{3}{36} & \frac{4}{36} & \frac{5}{36} & \frac{6}{36} & \frac{5}{36} & \frac{4}{36} & \frac{3}{36} & \frac{2}{36} & \frac{1}{36} \\[4pt] F_Z(z) & \frac{1}{36} & \frac{3}{36} & \frac{6}{36} & \frac{10}{36} & \frac{15}{36} & \frac{21}{36} & \frac{26}{36} & \frac{30}{36} & \frac{33}{36} & \frac{35}{36} & 1 \end{array} \end{gathered} \] Now, we said earlier, the distribution function \(F(x)\) is defined for all real numbers. This property is not as clear in the tabular representation (5.8). In fact, the distribution function of a discrete random variable is a step function. Hence, (5.8) is actually as follows (values as rounded floating-point numbers): \[ \begin{gathered} F_Z(z)=P(Z\le z)=\left\{\begin{array}{cl} 0.000 & \text{for } z<2\\[4pt] 0.028 & 2\le z < 3\\[4pt] 0.083 & 3\le z < 4\\[4pt] 0.167 & 4\le z < 5\\[4pt] 0.278 & 5\le z < 6\\ \vdots &\\ 0.972 & 11\le z < 12\\[4pt] 1.000 & z\ge 12 \end{array} \right. \end{gathered} \tag{5.9}\] This function is illustrated in Figure 5.4.

This figure shows us that a discrete distribution function exhibits jumps at those points \(x\) for which \(f(x)>0\). Between the jumps, the distribution function is constant.

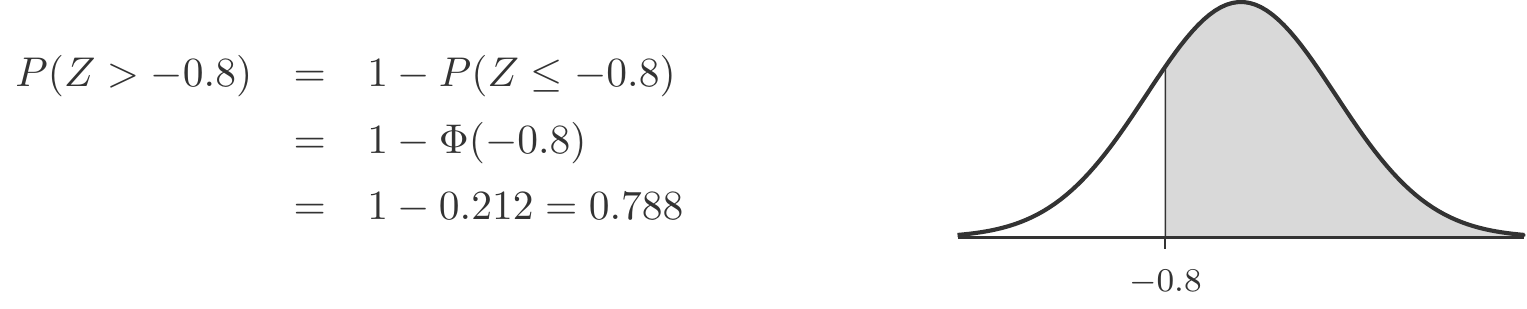

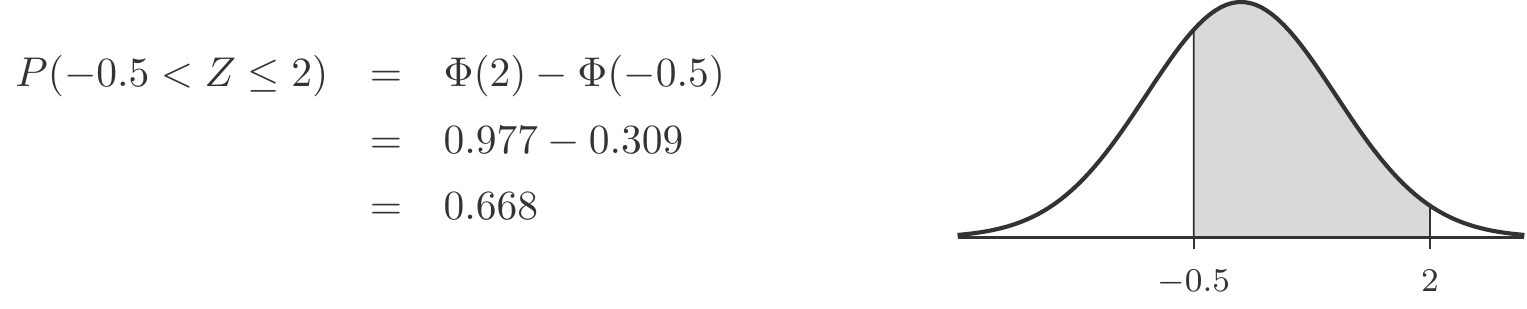

For example, we can read: \[ \begin{gathered} F_Z(5.5)=P(Z\le 5.5)=P(Z\le 5)=\frac{10}{36}\approx 0.278 \end{gathered} \] The distribution function condenses important information: \[ \begin{aligned} %% {alignat*}{2} P(X>a)&=1-P(X\le a)&=&\;1-F(a),\\[4pt] P(a<X\le b)&=P(X\le b)-P(X\le a)&=&\;F(b)-F(a). \end{aligned} \] These properties also hold under much more general conditions, so we summarize in the form of a theorem:

Theorem 5.22 (Characteristic of the Distribution Function)

The distribution function \(F(x)\) is defined for all real numbers.

\(0\le F(x)\le 1\).

\(F(x)\) is monotonic increasing.

\(\lim_{x\to-\infty}F(x)=0\), \(\lim_{x\to\infty}F(x)=1\).

\(P(X\le a)=F(a)\).

\(P(X>a)=1-F(a)\).

\(P(a<X\le b)=F(b)-F(a)\).

Exercise 5.23 Let \(N\) be the number of machine failures per day in an offset printing company. The probability function of \(N\) has been estimated from past collected data: \[ \begin{gathered} \begin{array}{c|cccccc} n & 0 & 1 & 2 & 3 & 4 & 5\\ \hline\\[-10pt] P(N=n) & 0.224 & 0.336 & 0.252 & 0.126 & 0.047 & 0.015 \end{array} \end{gathered} \] More than 5 failures per day have never been observed.

Determine the distribution function of \(N\).

What is the probability of more than 3 failures per day?

Solution: The cumulative distribution function \(F(n)=P(N\le n)\) is obtained from the cumulative values of the probability function: \[ \begin{gathered} \begin{array}{c|cccccc} n & 0 & 1 & 2 & 3 & 4 & 5\\ \hline\\[-10pt] P(N=n) & 0.224 & 0.336 & 0.252 & 0.126 & 0.047 & 0.015\\[3pt] P(N\le n)& 0.224 & 0.560 & 0.812 & 0.938 & 0.985 & 1.000 \end{array} \end{gathered} \] The probability of observing more than 3 failures in one day is: \[ \begin{gathered} P(N>3)=1-P(N\le 3)=1-0.938 = 0.062\,. \end{gathered} \] □

5.1.8 Continuous Distributions

Discrete random variables take on values in a finite or countably infinite set. They often express count results, such as the number of games won, the number of customers waiting in line at an airport check-in counter, the number of machine failures per day, the number of children per family, etc.

There is also another class of random variables that assume values in an interval. They typically arise not through counting, but through measurements that result in very specific measured values. Here are some examples:

- Waiting times for customers, service life of products, etc.

- Distances, lengths, weights, etc.

- Returns on financial investments

and much more.

The value \(P(t)\) of a portfolio at time \(t\) is expressed in units of currency and is strictly speaking a discrete quantity. However, since the range of values for \(P(t)\) is usually very large relative to the smallest currency unit (e.g., one Euro cent), \(P(t)\) will be approximately treated as a continuous quantity.

Events that we express using continuous random variables are no longer point events, like \(\{X=5\}\), but intervals on the number line.

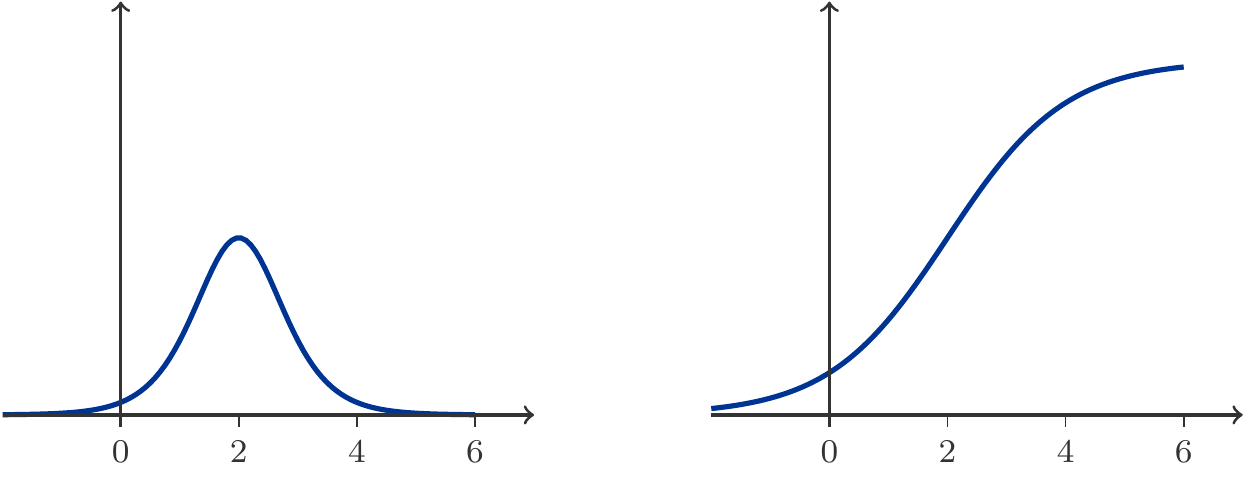

To assign probabilities to such intervals, we need the concept of a density function.

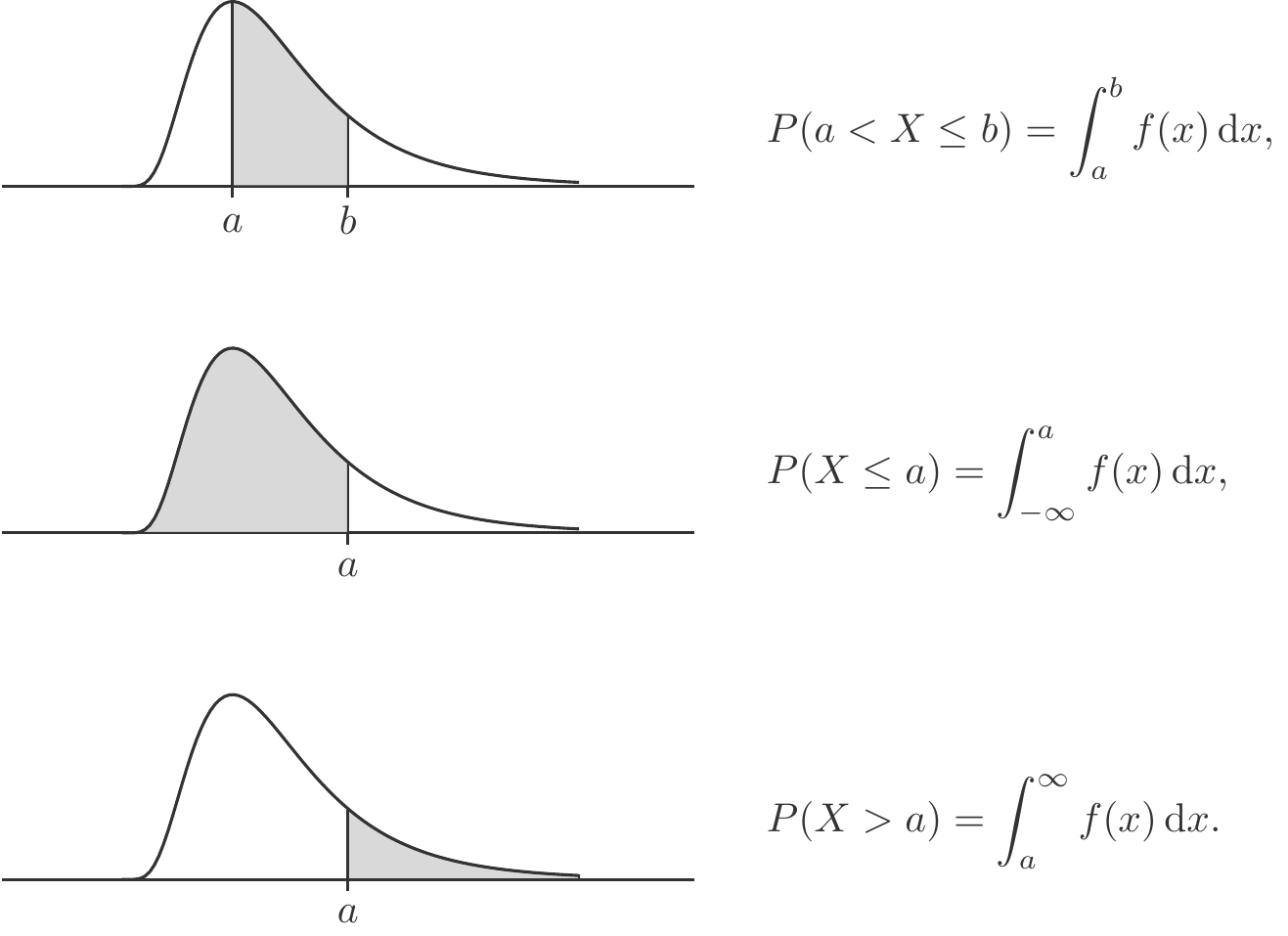

The density of a continuous random variable \(X\) is a continuous function \(f(x)\) with \[ \begin{gathered} f(x)\ge 0,\qquad \int_{-\infty}^\infty f(x)\,\mathrm{d}x = 1. \end{gathered} \] The last requirement states (see Chapter 4) that the area under the density must be equal to 1. In fact, it is areas under \(f(x)\) that represent probabilities. In particular, we have: \[ \begin{gathered} F(a)=P(X\le a)=\int_{-\infty}^af(x)\,\mathrm{d}x. \end{gathered} \] The function \(F(x)\) is called the distribution function of \(X\), just as in the case of discrete random variables. It possesses all the properties formulated in Theorem 5.22. In particular, we have: \[ \begin{aligned} P(X>a)&=\int_a^\infty f(x)\,\mathrm{d}x=1-F(a),\\[5pt] P(a<X\le b)&= \int_a^bf(x)\,\mathrm{d}x=F(b)-F(a). \end{aligned} \] Figure 5.5 illustrates these relationships.

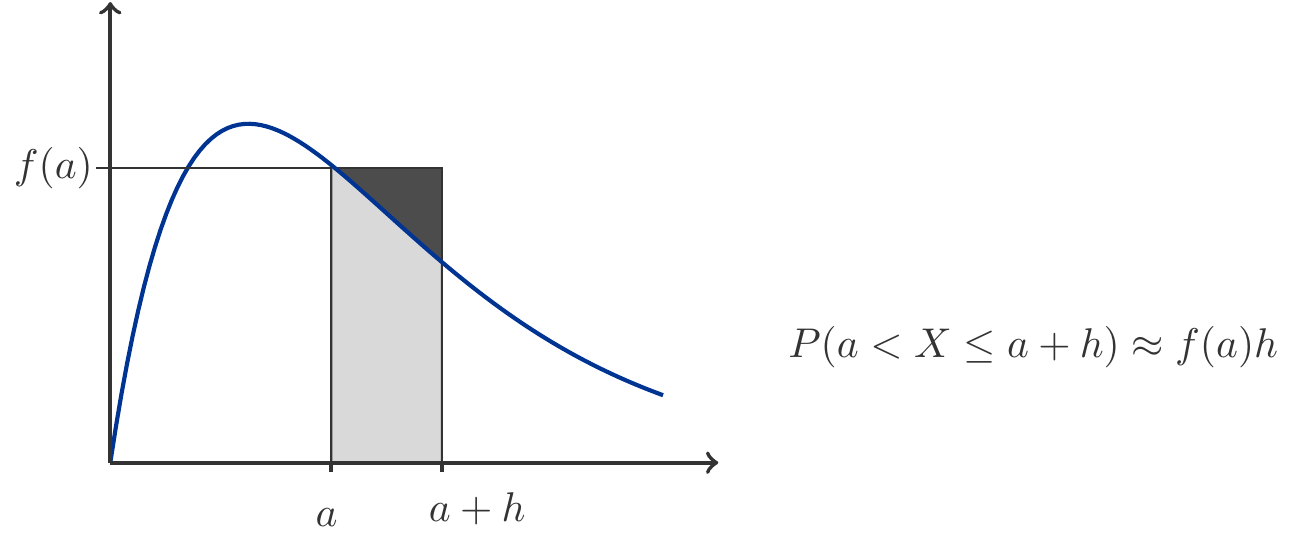

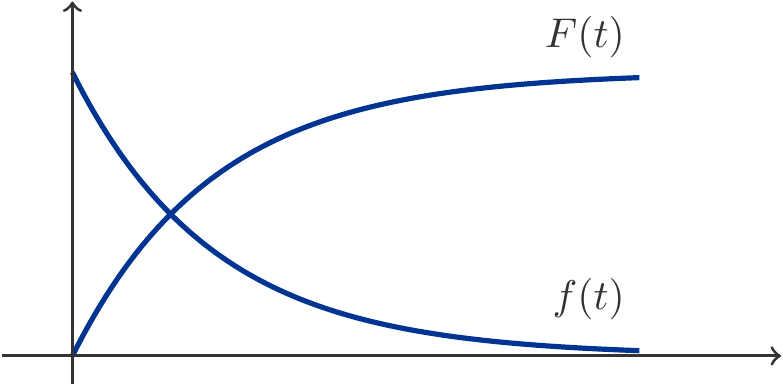

Furthermore, the cumulative distribution function of a continuous random variable is differentiable at all points where the density \(f(x)\) is continuous, and it holds that: \[ \begin{gathered} f(x)=F'(x). \end{gathered} \tag{5.10}\] It is important to emphasize that the function values of the density function \(f(x)\) are not probabilities. However, for very small values of \(h>0\) (principle of local linearization, Chapter 3, see also Figure 5.6), it approximately holds that: \[ \begin{gathered} P(x< X\le x+h)\approx f(x) h. \end{gathered} \tag{5.11}\]

This has another important consequence that gives rise to another characteristic property of continuous random variables. When we let \(h\to 0\) and if \(a\) is not a discontinuity point (jump point) of the density, then \[ \begin{gathered} \lim_{h\to 0} P(a<X\le a+h)=P(X=a)=0. \end{gathered} \tag{5.12}\] This property is very peculiar, yet typical for continuous random variables. Although we have demanded in Theorem 5.9 that the impossible event be assigned probability zero, it is clear that the converse of this statement is not correct. Boris Gnedenko (1912–1995), an eminent Russian probabilist, tried to explain this fact to his students as follows: we must distinguish between the theoretical impossibility of an event (e.g., rolling a 7 with a 6-sided die), and practical impossibility. Let’s assume that \(X\) is the lifespan of an energy-saving light bulb. Obviously, \(X\) takes its values in the interval \([0,\infty)\). It is theoretically possible that \(X\) lasts 1000 hours. But in practice, this is impossible, hence \(P(X=1000)=0\).

An intuitive argument that supports the formal aspect (5.12) would be this: The statement \(\{X=1000\}\) implies, among other things, that the value of the random variable is not 1000.00000000001 nor 999.999999999999. So, if we make a bet on the event \(\{X=1000\}\), then we would lose this bet if the value of the random variable \(X\) differs by an extremely small, practically immeasurable amount from 1000. Since this will almost always be the case, we will always lose the bet. This is precisely what the equation \(P(X=1000)=0\) means.

The Exponential Distribution is one of the most important continuous distributions. We say that a random variable \(T\) is exponentially distributed, when its density is given by: \[ \begin{gathered} f(t)=\left\{\begin{array}{cl} 0 & t<0\\ \lambda e^{-\lambda t} & t\ge 0 \end{array}\right.,\qquad \lambda >0 \end{gathered} \tag{5.13}\] The parameter \(\lambda\) is called the event rate, and this term also hints at the most important applications of the Exponential Distribution. It is commonly used to model time intervals \(T\) between random events. These events can be:

Arrivals of customers in the broadest sense, e.g., passengers at an airport, customers in a bank;

Failures of technical equipment: The time between two failures of a machine, its up-time, is often exponentially distributed.

The time intervals between emissions of \(\alpha\)-particles by a nucleus: this was even the original application of the Exponential Distribution in the early 20th century.

The distribution function is easily determined using the methods that we learned in Chapter 4: \[ \begin{gathered} P(T\le t)=F(t)=\int_0^t\lambda e^{-\lambda s}\,\mathrm{d}s=-e^{-\lambda s}\bigg|_0^t=1-e^{-\lambda t},\qquad t\ge 0. \end{gathered} \] The density possesses a discontinuity (jump) at \(t=0\). The distribution function has a corner at this point and is not differentiable there. However, for all \(t>0\) we have \(F'(t)=f(t)\), as the reader should verify.

The event rate \(\lambda\) is to be interpreted as follows: its value tells us, how closely events follow each other. The larger \(\lambda\), the smaller is the average distance between events, the more closely they follow one another. The smaller \(\lambda\), the less densely the events follow each other, their average distances are correspondingly larger.

Exercise 5.25 (Hospital Management) Studies in the United States have shown that the length of stay (in days) of patients in intensive care units (ICUs) can be very well approximated by an exponential distribution with \(\lambda=0.2564\). This corresponds to an average length of stay of 3.9 days.

What percentage of patients have to spend more than 10 days in an ICU?

What critical length of stay is exceeded by 1% of the patients?

Solution:

(a) Let \(T\) be the length of stay. \[

\begin{aligned}

P(T>10)&=1 - P(T\le 10)\\

&=1 -(1-e^{-10\cdot 0.2564})=e^{-2.564}=

0.076996 \approx 0.077\,.

\end{aligned}

\] About 7.7% of patients stay longer than 10 days in the ICU.

(b) We are looking for a time span \(t\) such that \(P(T>t)=0.01\): \[ \begin{gathered} P(T>t)=e^{-0.2564 t}=0.01\\ \implies t=-\frac{\ln(0.01)}{0.2564} =17.961 \approx 18\text{ days}. \end{gathered} \] □

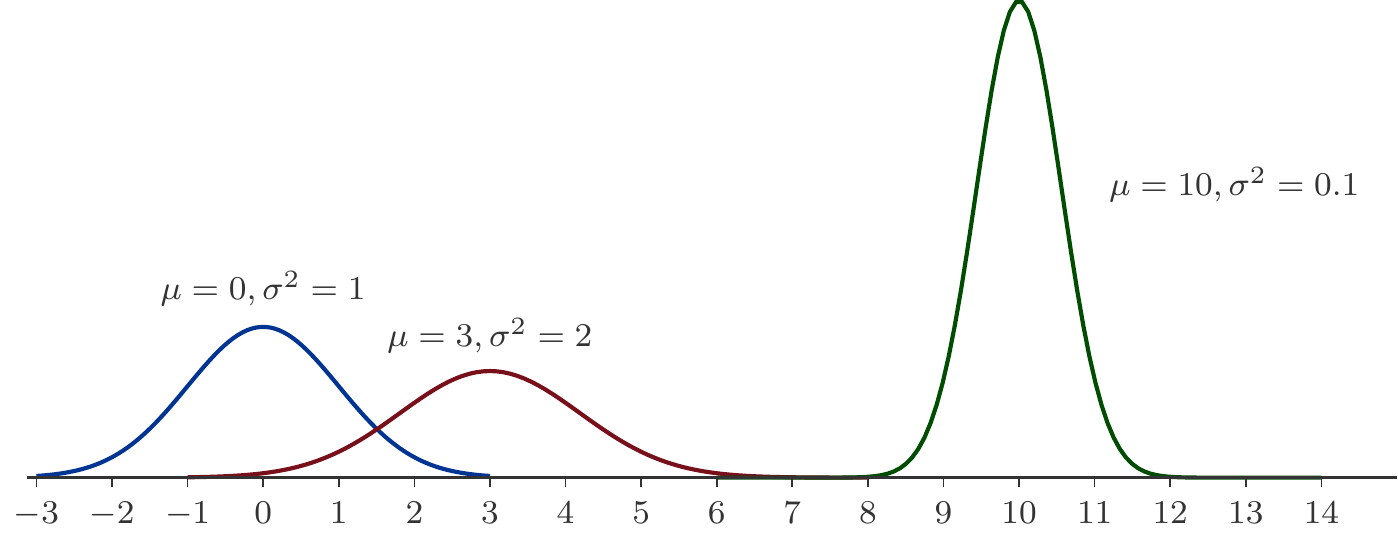

A random variable \(X\) is called logistically distributed if it has the following distribution function and density: \[ \begin{aligned} F(x)&=P(X\le x)=\frac{1}{1+e^{-(x-\mu)/s}},\qquad x\in\mathbb R\\[5pt] f(x)&=\frac{e^{-(x-\mu)/s}}{s\left(1+e^{-(x-\mu)/s}\right)^2}\,.\ \end{aligned} \tag{5.14}\] The parameter \(\mu\) is (see Section 5.3.1) and indicates the location of the maximum of the density \(f(x)\), \(s\) is a scale parameter. The applications of the Logistic Distribution are diverse, ranging from statistical data analysis through technical reliability to financial mathematics. For instance, there are sound (statistical) reasons to use the Logistic Distribution for modeling returns of financial assets.

Exercise 5.27 (Financial Mathematics) The annual return \(X\) of a security is logistically distributed with \[ \begin{gathered} P(X\le x)=\frac{1}{1+e^{-(x-0.1)/0.022}}. \end{gathered} \] At the beginning of a year, 2000 Units of currency (GE) were invested in the security.

What is the probability that the profit at the end of the year exceeds 300 units of currency (GE)?

What is the probability that the return is negative, thus resulting in a loss of capital?

Solution: (a) Since the profit \(G=2000X\), we have: \[ \begin{gathered} G>300\Leftrightarrow 2000X>300 \implies X>\frac{300}{2000}=0.15\,. \end{gathered} \] Therefore: \[ \begin{aligned} P(X>0.15) & = 1-P(X\le 0.15) \\ & = 1-\frac{1}{1+e^{-(0.15-0.1)/0.022}}=0.093407\,. \end{aligned} \] The probability of this occurring is thus approximately 9.3%.

(b) We are looking for \(P(X\le 0)\): \[ \begin{gathered} P(X\le 0)=\frac{1}{1+e^{-(0-0.1)/0.022}}=0.010504. \end{gathered} \] This undesirable scenario therefore occurs with a probability of about 1%. □

5.2 Conditional Probabilities

5.2.1 Fourfold Tables

Let \(A\) and \(B\) be two events associated with a random experiment. We can also imagine these as two subsets within a finite population.

We conduct the random experiment (for example, drawing an element from the population) and record which of the two events occur. We compile the possible combinations of events in a table: \[ \begin{gathered} \begin{array}{c|cc} & B & B' \\ \hline A & A\cap B & A\cap B' \\ A' & A'\cap B & A'\cap B'\\ \end{array} \end{gathered} \] Here, \(A'\) denotes the complementary event of \(A\), as usual.

If we populate this table with the respective probabilities, we obtain a fourfold table or contingency table. We can add the probabilities of the individual events at the margins of the table, which can be calculated as row or column totals according to the addition rule. \[ \begin{gathered} \begin{array}{c|cc|c} & B & B' &\\ \hline A & P(A\cap B) & P(A\cap B') & P(A) \\ A' & P(A'\cap B) & P(A'\cap B') & P(A')\\ \hline & P(B) & P(B') & 1 \end{array} \end{gathered} \] The probabilities that come out as row and column totals, namely \(P(A), P(A'), P(B)\) and \(P(B')\), are called total probabilities. Thus, \[ \begin{gathered} P(A)=P(A\cap B) + P(A\cap B') \end{gathered} \] is the total probability of the event \(A\). This means the probability of \(A\) occurring, regardless of whether it occurred with \(B\) or its complement. Obviously, the fundamental addition rule (5.2) is behind this, because the events \(\{A\cap B\}\) and \(\{A\cap B'\}\) are certainly mutually exclusive: \[ \begin{gathered} (A\cap B)\cap (A\cap B')=A\cap B\cap B'=\varnothing, \end{gathered} \] since \(B\cap B'=\varnothing\). The total probabilities for \(A', B\) and \(B'\) are calculated analogously.

To completely fill out a fourfold table, one only needs three independent pieces of information.

ELISA (Enzyme-linked Immunosorbent Assay) is a method available since the mid-1980s for detecting antibodies against the HIV virus in human blood. It is a relatively low-cost test that is used to screen blood banks, but also to test groups of people, such as military recruits, for HIV. ELISA is a screening test, meaning it is primarily used to identify all people in a group, or their blood donations, who may be infected with HIV.

For this example, we define the following two events: \[ \begin{aligned} A &= \text{\{a person is infected with the HIV virus}\\ &\phantom{=\{} \text{and has formed antibodies against the virus\}},\\[5pt] B &=\text{\{ELISA yields a positive test result\}}. \end{aligned} \] In a large-scale study (> 50000 participants), the following probabilities were estimated: \[ \begin{gathered} \begin{array}{l|cc|r} & B & B' &\\ \hline A & 0.0038 & 0.0002 & 0.0040\\ A'& 0.0301 & 0.9659 & 0.9960\\ \hline & 0.0339 & 0.9661 & 1.0000 \end{array} \end{gathered} \] What do these data tell us?

The proportion of HIV-infected people in the total population, the prevalence of HIV, is \(P(A)=0.004\), or 0.4%.

The proportion of participants who tested positive is \(P(B)=0.0339\).

With a probability of \(P(A\cap B')=0.0002\), a participant was infected with HIV and had a negative test result.

With probability \(P(A'\cap B)=0.0301\), a participant was not infected and yet had a positive test result.

These are insights that can be directly read from the contingency table.

But there is more information contained within this table.

Definition 5.29 (Conditional Probability) Let \(A\) and \(B\) be events with \(P(B)>0\), then \[ \begin{gathered} P(A|B)=\frac{P(A\cap B)}{P(B)} \end{gathered} \tag{5.15}\] is the conditional probability of the event \(A\) given the condition \(B\).

This definition answers the question: Among how many cases in which event \(B\) occurs, does the event \(A\) also occur?

The formula (5.15) is easy to understand when interpreted as a statement about proportions in finite populations: The conditional probability is identical to the proportion of \(A\) in the entirety of \(B\).

We continue with Example 5.28.

We first calculate \(P(A|B)\). This is the probability that someone who tested positive using ELISA (event \(B\)) is actually a carrier of the HIV virus (event \(A\)). With (5.15) we obtain: \[ \begin{gathered} P(A|B) = \frac{P(A \cap B)}{P(B)} = \frac{0.0038}{0.0339} = 0.1121\,. \end{gathered} \] This is interesting: someone who tested positive is actually infected with the HIV virus with a probability of only about 11%.

Now we calculate \(P(B|A)\), which is the proportion of those who tested positive (event \(B\)) among the HIV-infected (event \(A\)): \[ \begin{gathered} P(B|A) = \frac{P(A \cap B)}{P(A)} = \frac{0.0038}{0.0040} = 0.95\,. \end{gathered} \] This probability is called sensitivity of the test in a medical context. Naturally, one would like this probability to be as high as possible, because this is the probability that a person infected with HIV will actually be detected by ELISA.

Another interesting value is \(P(B'|A')\): \[ \begin{gathered} P(B'|A') = \frac{P(A' \cap B')}{P(A')} = \frac{0.9659}{0.9960} = 0.9698\,. \end{gathered} \] This is the probability that someone who is not HIV infected will have a negative test result, the specificity of ELISA. Here too, it is clear that one would like to achieve the highest possible values for this probability. The specificity of ELISA is about 97% here.

And finally \(P(A'|B')\). How certain can someone who tested negative be that they are not infected with HIV? \[ \begin{gathered} P(A'|B') = \frac{P(A' \cap B')}{P(B')} = \frac{0.9659}{0.9661} = 0.9998\,. \end{gathered} \] This value is reassuring.

From the definition of conditional probability (Definition 5.29), an important formula follows. It allows us to express the probability that two events occur together, using conditional probability:

Theorem 5.31 (Product Rule) The product rule applies: \[ \begin{gathered} P(A \cap B) = P(A|B)P(B). \end{gathered} \tag{5.16}\] This formula is also called the multiplication theorem.

In some problems, the conditional probabilities are known, but other probabilities are missing. In such cases, the missing probabilities must be calculated from the conditional probabilities. For this purpose, we use the product rule (5.16).

Exercise 5.32 (The typical women’s car is small and pink) In a study conducted in Germany in 2013, behavioral differences between men and women when purchasing a new car were examined. In the year 2013, 24% of the new cars were purchased by women, and 76% by men. Company cars were not included in the survey.

The focus of the investigation was, among other things, the widespread prejudice that women prefer small cars. For this purpose, the cars were divided into small cars \(K\) and non-small cars \(K'\) (sedans, station wagons, SUVs, etc.).

It was found that a full 10% of men could see themselves purchasing a small car, while it was 28% for women.

What percentage of the sold cars are small cars?

What percentage of the small car buyers are male?

Solution: First, we define the events of interest: \[ \begin{aligned} K & =\{\text{a compact car is purchased}\},\\ F & =\{\text{buyer is female}\},\\ M & =\{\text{buyer is male}\}. \end{aligned} \] The information tells us that 10% of compact car buyers are male, while the percentage for women is 28%. Therefore, we know two conditional probabilities: \[ \begin{gathered} P(K|M)=0.1,\quad P(K|F)=0.28\,. \end{gathered} \] Moreover, we know: \[ \begin{gathered} P(F)=0.24,\quad P(M)=1-P(F)=0.76. \end{gathered} \] Using the product formula (5.16), we can now calculate: \[ \begin{aligned} P(K\cap M)&= P(K|M)P(M)=0.1\cdot 0.76=0.076,\\ P(K\cap F)&= P(K|F)P(F)=0.28\cdot 0.24=0.0672\,. \end{aligned} \] With this, we have the groundwork for a contingency table: \[ \begin{gathered} \begin{array}{l|cc|c} &M & F\\ \hline K & 0.0760 & 0.0672 &\\ K'& & &\\ \hline & 0.7600 & 0.2400 & 1.0000 \end{array} \end{gathered} \] The missing values can easily be found by completing the rows and columns, so that we finally have the following complete contingency table: \[ \begin{gathered} \begin{array}{l|cc|c} &M & F\\ \hline K & 0.0760 & 0.0672 & 0.1432\\ K'& 0.6840 & 0.1728 & 0.8568\\ \hline & 0.7600 & 0.2400 & 1.0000 \end{array} \end{gathered} \] Now we can answer the posed questions.

The market share of compact cars is 14.3%, because \(P(K)=0.1432\).

The proportion of male buyers among compact car buyers is: \[ \begin{gathered} P(M|K)=\frac{P(M\cap K)}{P(K)}=\frac{0.0760}{0.1432}=0.5307\,. \end{gathered} \] Indeed, 53% of compact cars are bought by men! As for how many of them are pink, the data does not tell us. □

5.2.2 Independent Events

It is possible for two events \(A\) and \(B\) to satisfy the relationship \[ \begin{gathered} P(A|B)>P(A) \end{gathered} \] In this case, one could say that the event \(B\) favors the occurrence of \(A\). However, this formulation can be misleading as it suggests a causal effect of \(B\) on \(A\). In reality, the inequality is completely symmetrical in \(A\) and \(B\), as can be seen from \[ \begin{gathered} P(A|B)>P(A) \Leftrightarrow P(A\cap B)>P(A)P(B) \Leftrightarrow P(B|A)>P(B) \end{gathered} \] It is therefore more accurate to say that in this case the two events \(A\) and \(B\) favor each other or are positively coupled.

Similarly, in the case of \[ \begin{gathered} P(A|B)<P(A) \Leftrightarrow P(A\cap B)<P(A)P(B) \Leftrightarrow P(B|A)<P(B), \end{gathered} \] it is said that the two events hinder each other or are negatively coupled.

Exercise 5.33 (Accident Statistics) Out of 1000 traffic accidents, 280 had a fatal outcome (event \(A\)) and 100 occurred at a speed of over 150 km/h (event \(B\)). 20 accidents were non-fatal and occurred at speeds above 150 km/h.

What is the probability that a high-speed accident is fatal?

What is the probability that a fatal accident occurred at high speed?

Assess the coupling of events \(A\) and \(B\). Try to interpret the coupling causally.

Solution: The absolute frequencies are: \[ \begin{gathered} \begin{array}{c|cc|c} & B & B' &\\ \hline A & & & 280\\ A'&20 & & \\ \hline & 100 & & 1000 \end{array} \quad\Rightarrow\quad \begin{array}{c|cc|c} & B & B' &\\ \hline A & 80&200 & 280\\ A'& 20 &700& 720 \\ \hline & 100 &900 &1000 \end{array} \end{gathered} \] The estimated probabilities are then \[ \begin{gathered} \begin{array}{c|cc|c} & B & B' &\\ \hline A & 0.08& 0.20 & 0.28\\ A'&0.02 & 0.70& 0.72 \\ \hline & 0.10 & 0.90 &1.00 \end{array} \end{gathered} \] (a) The proportion of fatal traffic accidents to high-speed accidents is: \[ \begin{gathered} P(A|B)=\dfrac{P(A\cap B)}{P(B)}=\dfrac{0.08}{0.1}=0.8\,. \end{gathered} \] (b) The proportion of high-speed accidents to traffic accidents with fatalities is: \[ \begin{gathered} P(B|A)=\dfrac{P(A\cap B)}{P(A)}=\dfrac{0.08}{0.28}=0.2857\,. \end{gathered} \] (c) Since \(P(A|B)>P(A)=0.28\) and \(P(B|A)>P(B)=0.1\), there is a positive coupling. The two events favor each other.

These results suggest that high speed might be a cause for the fatal outcome of an accident. However, this conclusion is not compelling. It could also be that certain character traits of the driver cause both the high speed and the fatality of the accident. □

It is important to always remember that coupling of two events does not have to be an indication of a causal relationship between the events. It is very common for stratification of the population to be the cause of a spurious coupling of events.

No coupling occurs when \(P(A|B)=P(A)\) or \(P(B|A)=P(B)\). In this case, the two events are called independent. This has an important consequence: \[ \begin{aligned} P(A|B)=P(A) & \implies \frac{P(A \cap B)}{P(B)}=P(A) \\ & \implies P(A \cap B)=P(A)P(B). \end{aligned} \]

Theorem 5.34 (Stochastic Independence) Two events \(A\) and \(B\) are stochastically independent if \[ \begin{gathered} P(A\cap B)=P(A)P(B). \end{gathered} \tag{5.17}\] Otherwise, they are stochastically dependent or coupled.

When dealing with more than two events \(A_1,A_2,\ldots,A_n\), independence means that for all selections \(i_1<i_2<\ldots< i_k\) the equation \[ \begin{gathered} P(A_{i_1}\cap A_{i_2}\cap\ldots\cap A_{i_k})= P(A_{i_1})P(A_{i_2})\cdots P(A_{i_k}) \end{gathered} \tag{5.18}\] is true.

There are numerous cases of application where one knows in advance that certain events are independent. This information can then be used to determine probabilities. Indeed, many problems are significantly simplified by assuming independence.

Exercise 5.35 (Process Engineering) A technical system consists of three parts, which can fail independently of each other. The failure probabilities of the individual parts are 0.2, 0.3, and 0.1. Let \(X\) denote the number of failing parts. Determine the probability function of the random variable \(X\), i.e. \[ \begin{gathered} P(X=0),\quad P(X=1), \quad P(X=2),\quad P(X=3). \end{gathered} \]

Solution: Let \(A,\,B,\, C\) denote the events that each of the three parts fails, respectively. Given are the probabilities \(P(A)=0.2\), \(P(B)=0.3\) and \(P(C)=0.1\).

Taking into account the independence of the events \(A,B,C\) and using the addition rule, we obtain: \[ \begin{gathered} \small \begin{array}{l l } P(X=0) & = P(A'\cap B'\cap C')=P(A')P(B')P(C')\\[4pt] & = 0.8 \cdot 0.7 \cdot 0.9 = 0.504,\\[7pt] P(X=1) & = P(A\cap B' \cap C')+ P(A'\cap B \cap C')+ P(A'\cap B' \cap C)\\[4pt] & = P(A)P(B')P(C')+P(A')P(B)P(C')+P(A')P(B')P(C)\\[4pt] & =0.2\cdot 0.7 \cdot 0.9 + 0.8\cdot 0.3 \cdot 0.9 + 0.8\cdot 0.7\cdot 0.1 = 0.398,\\[7pt] P(X=2) & = P(A\cap B\cap C')+ P(A\cap B'\cap C)+ P(A'\cap B \cap C)\\[4pt] & = P(A)P(B)P(C')+P(A)P(B')P(C)+P(A')P(B)P(C)\\[4pt] & = 0.2\cdot 0.3 \cdot 0.9 + 0.2 \cdot 0.7 \cdot 0.1 + 0.8 \cdot 0.3 \cdot 0.1 =0.092,\\[7pt] P(X=3) & = P(A \cap B\cap C)=P(A)P(B)P(C)\\[4pt] &= 0.2 \cdot 0.3 \cdot 0.1 = 0.006\,. \end{array} \end{gathered} \]

Thus, the probability function of the random variable \(X\) in tabular form is: \[ \begin{gathered} \begin{array}{c|cccc} k & 0 & 1 & 2 & 3\\ \hline P(X=k) & 0.504 & 0.398 & 0.092 & 0.006 \end{array} \end{gathered} \] □

The concept of independence can also be applied to random variables, because statements about random variables such as \(\{X>b\}\) or \(\{a<X≤b\}\) are random events.

Definition 5.36 Two random variables \(X\) and \(Y\) are called stochastically independent if statements about the random variables are stochastically independent events.

Remark 5.37 If \(X\) and \(Y\) are independent, then the events \(\{X\le a\}\) and \(\{Y\le b\}\) are also independent. Therefore, \[ \begin{gathered} P(\{X\le a\}\cap \{Y\le b\})=P(X\le a)\cdot P(Y\le b). \end{gathered} \] Since expressions like the one on the left side of this equation occur frequently in applications (e.g., in statistics), a simplified comma notation has become customary: \[ \begin{gathered} P(\{X\le a\}\cap \{Y\le b\})=P(X\le a, Y\le b). \end{gathered} \]

Exercise 5.38 (A Call Center) A company operates a call center to efficiently process customer inquiries. It is known that the duration \(T\) (in minutes) of a customer call is a continuous random variable with distribution function \(P(T\le t)=1-e^{-t/10}\).

Three customers call at the same time and are immediately connected to an agent. Their calls last \(T_1, T_2\), and \(T_3\) minutes, where these random variables are stochastically independent.

What is the probability that the longest of the three calls lasts longer than 20 minutes?

Solution: Let \(M=\max(T_1,T_2,T_3)\), where \(T_1,T_2\), and \(T_3\) are independent. We first consider the event \(\{M\le h\}\). This event can only occur if every \(T_i\le h\): \[ \begin{gathered} \{M\le h\} \Leftrightarrow \{T_1\le h\}\cap \{T_2\le h\} \cap \{T_3\le h\}, \end{gathered} \] because if just one \(T_i>h\), then \(M\) could no longer be \(\le h\). Equivalent events have the same probability, therefore: \[ \begin{aligned} P(M\le h)&=P(T_1\le h,T_2\le h,T_3\le h)\\[4pt] &=P(T_1\le h)\cdot P(T_2\le h)\cdot P(T_3\le h)\quad\text{(Independence)}\\[4pt] &=(1-e^{-h/10})^3. \end{aligned} \] We are looking for \[ \begin{aligned} P(M>20)&=1-P(M\le 20)=1-(1-e^{-20/10})^3=0.3535. \end{aligned} \] This is a relatively high probability, because one conversation, say the first one, lasting longer than 20 minutes is only: \[ \begin{gathered} P(T_1>20)=e^{-20/10}=0.1353. \end{gathered} \] □

5.3 Expected Value and Variance

5.3.1 The Concept of Expected Value

Let \(X\) be a random variable. If the underlying random experiment is repeated frequently and the resulting values of the random variable \(X\) are collected, then a data list \(x_1,x_2,\ldots\) is created. We can now calculate the averages \[ \begin{aligned} \bar{x}_1 &=\frac{x_1}{1},\;\bar{x}_2=\frac{x_1+x_2}{2},\;\bar{x}_3=\frac{x_1+x_2+x_3}{3}, \\ \bar{x}_4 &=\frac{x_1+x_2+x_3+x_4}{4}, \ldots \end{aligned} \tag{5.19}\] and study the progression of the averages with an increasing number of data. With many random variables, it is observed that these averages tend to approach a fixed value over time (provided that the repetitions are independent and occur under identical conditions). This limit is the long-term average of the random values of the random variable and is referred to as the expected value of the random variable.

Remark 5.39 (Existence of Expected Values) It is by no means the case that for every random variable the averages of the data lists converge. If that is not the case, then the concept of the expected value does not make sense for such a random variable. In that case, one says that the random variable does not possess an expected value. However, we will not consider such random variables in the following.

Our explanation of the term expected value is quite similar to our explanation of the concept of probability. This also results in a first fundamental relationship between probabilities and expected values.

Let \(A\) be an event, and let \(X_A\) be the following random variable: \[ \begin{gathered} X_A(\omega)=\left\{\begin{array}{ll} 1 &\text{if $\omega\in A$},\\ 0 &\text{if $\omega\not\in A$} \end{array}\right. \end{gathered} \] The random variable \(X_A\) indicates the occurrence of the event \(A\). That’s why it is called the indicator variable of the event \(A\).

To determine the expected value of an indicator variable \(X_A\), one must look at the averages of data lists that are generated by an indicator variable. It is easy to see that these averages are identical to the relative frequencies with which the event \(A\) occurs in a series of repetitions of the random experiment. Therefore, the limit of the averages of \(X_A\) must coincide with the limit of the relative frequencies of \(A\). This means \(E(X_A)=P(A)\).

Another fundamental property of the expected value of random variables is a rule called linearity.

If \(X\) and \(Y\) are two random variables and \(a,b\in\mathbb R\) are any real numbers. Then we can form another random variable \(Z=aX+bY\). It’s obvious that for the averages \(\overline{x}, \overline{y}\), and \(\overline{z}\) of data lists of these random variables the equation \(\overline{z}=a\overline{x}+b\overline{y}\) holds. This implies \(E(Z)=aE(X)+bE(Y)\).

In a very similar way, it is reasoned that a random variable whose values are nonnegative must also have a nonnegative expected value: \(X\ge 0 \implies E(X)\ge 0\).

To summarize:

Theorem 5.40 The expected value of random variables has the following properties:

(a) The expected value of an indicator variable is identical to the probability of the underlying event: \[ \begin{gathered} E(X_A)=P(A). \end{gathered} \]

(b) The expected value of a linear combination of random variables is identical to the corresponding linear combination of the expected values: \[ \begin{gathered} E(aX+bY)=aE(X)+bE(Y). \end{gathered} \]

(c) The expected value of a nonnegative random variable is nonnegative: \[ \begin{gathered} X\ge 0 \implies E(X)\ge 0. \end{gathered} \]

Exercise 5.41 (Cost model) A manufacturing company operates with monthly fixed costs of 1000 CU and variable costs of 5 CU per piece. The monthly production is a random variable with an expected value of 300 pieces. Find the expected value of the monthly costs.

Solution: Let \(X\) represent the monthly production and \(Y\) the monthly costs, so \(Y=1000+5X\). Consequently, we have \[ \begin{gathered} E(Y)=E(1000+5X)=1000+5E(X)=1000+5\cdot 300=2500. \end{gathered} \] □

5.3.2 Calculation of Expectation Values

In the following examples, we calculate expectation values of random variables that can take on finitely many different values.

Let \(X\) be, for example, a random variable with two possible values \(a\) and \(b\) and let \(A=\{X=a\}\) and \(B=\{X=b\}\). Then the random variable \(X\) can be represented as a linear combination of indicator variables: \[ \begin{gathered} X=aX_A+bX_B. \end{gathered} \] It is very important to fully understand the validity of this equation: If \(\{X=a\}\) is true, then \(X_A=1\) and \(X_B=0\). Accordingly, the linear combination on the right-hand side has the value \(a\), and the equation is correct. The same applies if \(\{X=b\}\) is true.

From the validity of the equation \(X=aX_A+bX_B\), it follows from the rules for calculating expectation values that \[ \begin{gathered} E(X)=aE(X_A)+bE(X_B)=aP(A)+bP(B). \end{gathered} \] This observation can be generalized to random variables that can take on finitely many values.

Theorem 5.42 Let \(X\) be a random variable that takes on values \(a_1, a_2,\ldots,a_n\) with probabilities \(P(X=a_k)=p_k\). Then \(E(X)\) is: \[ \begin{gathered} E(X)=a_1p_1+a_2p_2+\cdots+a_np_n. \end{gathered} \tag{5.20}\] In other words: \(E(X)\) is a weighted average of the values \(a_1,\ldots,a_n\) of \(X\), weighted with the probabilities \(p_1,\ldots,p_n\).

Exercise 5.43 (Dice Roll) (a) What is the expected number of dots when rolling a die?

(b) Find the expected value of the sum of the dots when rolling a die twice.

Solution: The number of dots is a random variable \(X\) with values \(1,2,\ldots,6\), each with a probability of \(1/6\).

(a) We apply (5.20): \[ \begin{aligned} E(X)&=1\cdot P(X=1)+2\cdot P(X=2)+\cdots+6\cdot P(X=6)\\ &=\frac{1}{6}(1+2+\cdots+6)=3.5\,. \end{aligned} \] This is a formal confirmation of the experiment from Figure 5.9.

(b) Let \(X\) be the number of dots on the first roll and \(Y\) the number of dots on the second roll. Then according to (Theorem 5.40 (b)): \[ \begin{gathered} E(X+Y)=E(X)+E(Y)=3.5+3.5=7 \end{gathered} \] □

A gambling game is called fair if the expected value of the winnings \(G\) matches the stake.

Exercise 5.44 (Lottery) In the 6 out of 45 lottery, the stake for a bet is 10 currency units. How high must the victory for a main prize be in order for it to be a fair game of chance?

Solution: Let \(A\) be the event of scoring a main prize and \(G\) the achieved gain. For it to be a fair game, the equation \[ \begin{aligned} Stake &=E(G)=E(Winning Amount\cdot X_A)=\\ &= Winning Amount\cdot E(X_A)= Winning Amount\cdot P(A) \end{aligned} \] must hold. We applied the theorem (Theorem 5.40 (a) and (b)). It follows: \[ \begin{gathered} \frac{Stake}{P(A)}=Winning Amount \end{gathered} \] We have already calculated the probability \(P(A)\) for the main prize in Exercise 5.12. Therefore, for a fair bet, the winning amount must be: \[ \begin{gathered} \frac{10}{P(A)}=10\cdot\frac{45\cdot 44\cdots 43\cdot 40}{ 6\cdot 5\cdots 2\cdot 1}=81,450,600. \end{gathered} \]

Exercise 5.45 (Financial Mathematics) A security with an initial value of \(300\) increases by 5 percent with a probability of 0.2 within a year, or it decreases by 5 percent. Find the expected value of the value of this investment after one year.

Solution: Let \(W\) denote the value of the security after one year, and let \(A\) be the event that the security increases by 5 percent. We use indicators again. Then, \[ \begin{gathered} W=300\cdot 1.05 \cdot X_A+ 300\cdot 0.95 \cdot X_{A'}=315 X_A+285 X_{A'}. \end{gathered} \] The following results from the theorem Theorem 5.40: \[ \begin{aligned} E(W)&= 315 E(X_A)+285 E(X_{A'})=315 P(A)+285P(A')\\ &=315\cdot 0.2+285\cdot 0.8= 291. \end{aligned} \]

Expectation values of continuous random variables

These are defined by integrals. Specifically, if the continuous random variable \(X\) has the density \(f(x)\), then its expectation value is: \[ \begin{gathered} E(X)=\int_{-\infty}^\infty xf(x)\,\mathrm{d}x. \end{gathered} \tag{5.21}\] The calculation of these integrals typically requires advanced calculus methods, which is why we will not go into detail here. For completeness, we mention two special cases that we have already encountered in applications (see Example 5.24 and Example 5.26):

Theorem 5.46 If \(T\) is exponentially distributed with distribution function \(F(t)=1-e^{-\lambda t}, t\ge 0\), then \(E(T)=1/\lambda\).

If \(X\) is logistically distributed with distribution function \(F(x)=\dfrac{1}{1+e^{-(x-\mu)/s}}, X\in\mathbb R\), then \(E(X)=\mu\).

We now take a look at an interesting problem from the field of actuarial science.

A survival life insurance consists of a promise to pay out a capital \(K\) after a period of \(t\) years if the policyholder is still alive at that point. If \(A\) denotes the event that the policyholder survives the \(t\) year waiting period, then the payout is obviously \(K\cdot X_A\). The present value of the payout at the time the insurance is taken out is \(B=Kd^tX_A\), where \(d\) denotes the discount factor.

The risk of insurance is understood to be the expected value \(R=E(B)\) of the present value of the payout. If \(q\) is the mortality rate of the insured during a year, then (oversimplified) \(P(A)=(1-q)^t\) and therefore the risk of the insurance is \[ \begin{gathered} R=E(B)=Kd^t(1-q)^t. \end{gathered} \] The insurance principle states, that the premium of an insurance policy, i.e., the price paid by the policyholder, must match the risk of the insurance. In practice, an insurance premium also includes administrative fees and is therefore larger than a pure risk premium.

Exercise 5.47 (Insurance) Calculate the risk premium for a 40-year-old man and a 40-year-old woman who want to take out a survival life insurance for a capital of 100 000 CU, to be paid out after 10 years. The legally binding interest rate for insurance calculations is 3%.

The mortality rates are 0.003 for 40-year-old men \(q_m=0.003\) and 0.0015 for 40-year-old women \(q_w=0.0015\).

How high is the yield for a surviving policyholder from such an insurance contract?

Solution: For men, the risk premium is \[ \begin{gathered} R_m=100000 \left( \frac{1}{1.03}\right)^{10} (1-0.003)^{10}=72207. \end{gathered} \] The annual yield \(r\) results from \(K_{10}=K_0(1+r)^{10}=R_m(1+r)^{10}\) and is \[ \begin{gathered} \left(\frac{100000}{72207}\right)^{1/10}-1=0.0331. \end{gathered} \] For women, the risk premium is \[ \begin{gathered} R_m=100000 \left( \frac{1}{1.03}\right)^{10} (1-0.0015)^{10}=73301. \end{gathered} \] The yield is \[ \begin{gathered} \left(\frac{100000}{73301}\right)^{1/10}-1=0.0315. \end{gathered} \] □

5.3.3 The Multiplication Rule for Expected Values

The expected value has a remarkable multiplication property when applied to products of independent random variables.

The independence of events can be formulated mathematically as a multiplication property of probabilities. Something similar applies to random variables and their expected value.

This can be seen directly with indicator variables. If \(A\) and \(B\) are two events, then obviously \(X_{A\cap B}=X_AX_B\) because the event \(A\cap B\) only occurs if both events \(A\) and \(B\) happen at the same time. Then \(X_A=1\) and \(X_B=1\) and therefore \(X_AX_B=1\). This leads to \(E(X_AX_B)=E(X_{A\cap B})=P(A\cap B)\). Thus, we get \[ \begin{gathered} E(X_AX_B)=E(X_A)E(X_B)\quad \Leftrightarrow\quad P(A\cap B)=P(A)P(B). \end{gathered} \] So, the two events \(A\) and \(B\) are independent if and only if the formula \[ \begin{gathered} E(X_AX_B)=E(X_A)E(X_B). \end{gathered} \] is correct.

In general, the following statement holds.

Theorem 5.48 Let \(X\) and \(Y\) be two random variables that possess an expected value. If \(X\) and \(Y\) are independent, then it holds that \[ \begin{gathered} E(XY)=E(X)E(Y). \end{gathered} \tag{5.22}\]

5.3.4 The Variance of Random Variables

Let \(X\) be a random variable with the expected value \(E(X)=\mu\).

The expected value of a random variable provides information about the long-term average values of the random variable. However, the expected value does not tell us about how much the values of the random variable scatter around $. In order to get an idea of the spread of the values of a random variable, we are interested in the deviations \(X-\mu\) of the random variable from its expected value.

Definition 5.49 Let \(X\) be a random variable and \(\mu\) its expected value. The expected value of the random variable \((X-\mu)^2\) is called the variance of the random variable: \[ \begin{gathered} \sigma^2=V(X)=E (X-\mu)^2. \end{gathered} \] The square root \(\sigma=\sqrt{V(X)}\) is referred to as the standard deviation of \(X\).

A simplified formula is used for calculating variances, which reduces the computational effort.

Theorem 5.50 (Steiner’s Translation Theorem) The is a random variable \(X\) with the expected value \(E(X)=\mu\) and the variance \(V(X)\). Then it holds that \[ \begin{gathered} V(X)=E(X^2)-\mu^2. \end{gathered} \]

Justification: This follows directly from the Definition 5.49. When we perform the squaring: \[ \begin{aligned} V(X)&=E(X^2-2\mu X+\mu^2)=E(X^2)-2\mu E(X)+\mu^2\\[5pt] &=E(X^2)-2\mu^2+\mu^2=E(X^2)-\mu^2. \end{aligned} \] □

Exercise 5.51 Calculate the variance of the number on a die when it is thrown.

Solution: We already know \(E(X)=3.5\). Now we calculate \(E(X^2)\): \[ \begin{gathered} E(X^2)=\frac{1}{6}\left(1^2+2^2+3^2+4^2+5^2+6^2\right)=\frac{91}{6}=15.1667. \end{gathered} \] Then it follows (using Theorem 5.50) \[ \begin{gathered} V(X)=E(X^2)-\mu^2=\frac{91}{6}-\left(\frac{7}{2}\right)^2=\frac{35}{12}\simeq 2.9167. \end{gathered} \] □

In the next exercise, we revisit the process engineering problem from Exercise 5.35.

Exercise 5.52 A random variable \(X\) has the probability function: \[ \begin{gathered} \begin{array}{c|cccc} k & 0 & 1 & 2 & 3\\ \hline P(X=k) & 0.504 & 0.398 & 0.092 & 0.006 \end{array} \end{gathered} \] Calculate \(E(X)\) and \(V(X)\).

Solution: We first calculate \(\mu=E(X)\) and \(E(X^2)\): \[ \begin{aligned} \mu&=0\cdot 0.504+1\cdot 0.398+2\cdot 0.092+3\cdot 0.006=0.6,\\[4pt] E(X^2)&=0^2\cdot 0.504+1^2\cdot 0.398+2^2\cdot 0.092+3^2\cdot 0.006=0.82\,. \end{aligned} \] Following from that \[ \begin{gathered} V(X)=E(X^2)-\mu^2=0.82-0.6^2=0.46\,. \end{gathered} \] □

Remark 5.53 (Interpretation of Variance) As one can read from the definition of variance, the variance \(V(X)\) of a random variable \(X\) contains information about how much the random variable \(X\) can fluctuate around its expected value \(E(X)\). This information can be specified mathematically quite precisely.

Let \(\sigma=\sqrt{V(X)}\) denote the standard deviation of the random variable \(X\). One might then ask how large the deviations \(X-E(X)\) might be. This question can only be answered precisely if the distribution of \(X\) is known.

A probability distribution that surprisingly appears in many applications is the normal distribution (see Section 5.4). For normally distributed random variables, the following holds: \[ \begin{gathered} P(|X-E(X)|\le \sigma)\approx 0.66, \quad P(|X-E(X)|\le 2\sigma)\approx 0.95 \end{gathered} \] The standard deviation can thus be used to create rules of thumb which approximately indicate the fluctuation ranges of random variables: In the interval \((E(X)-\sigma,E(X)+\sigma)\) lie 66 percent of observed values of \(X\), etc.

However, the aforementioned rules are based on the characteristics of the normal distribution. In unfavorable cases, it may happen that the probabilities of the fluctuation intervals are significantly lower than indicated above.

We now turn to the rules for calculating variances. First, we examine how the variance changes when we subject a random variable to a linear transformation.

Theorem 5.54 Let \(X\) be a random variable with the variance \(V(X)\). Then it holds \[ \begin{gathered} V(aX+b)=a^2V(X) \quad \text{for $a,b \in \mathbb R$}. \end{gathered} \]

Justification: From the Definition 5.49 of variance it follows: \[ \begin{aligned} V(aX+b)&=E(aX+b-E(aX+b))^2=E(aX-aE(X))^2\\ &=a^2E(X-E(X))^2=a^2V(X). \end{aligned} \] Incidentally, it also follows from this that the variance of a constant is zero, that is \(V(b)=0\). □

Exercise 5.55 (Linear Cost Model) A manufacturing company operates with monthly fixed costs of 1000 monetary units (MU) and variable unit costs of 5 MU. The monthly production is a random variable with a standard deviation of 20 units. Find the variance and standard deviation of the monthly costs.

Solution: If \(X\) denotes the monthly production and \(Y\) the monthly costs, then it follows \(Y=1000+5X\). Consequently (since \(V(X)=20^2=400\)): \[ \begin{gathered} V(Y)=V(1000+5X)=25V(X)=25\cdot 400 =10\,000. \end{gathered} \] The standard deviation \(\sqrt{V(Y)}\) is 100. □

It is neither expected nor correct to say that the variance of a sum of random variables matches the sum of their individual variances. However, it is all the more remarkable that such an addition law for variances does hold for independent random variables.

Theorem 5.56 If \(X\) and \(Y\) are independent random variables, then it holds that \[ \begin{aligned} V(X+Y)&=V(X)+V(Y). \end{aligned} \]

Justification: We begin our calculation and once again use Definition 5.49: \[ \begin{gathered} \begin{array}{rcl} V(X+Y) & = & E(X+Y - E(X+Y))^2 \\ & = & E(X-E(X) +Y-E(Y))^2\\ & = & E(X-E(X))^2 +2E\left[(X-E(X))(Y-E(Y))\right]\\ & & +E(Y-E(Y))^2. \end{array} \end{gathered} \] But for the middle part, due to the multiplication property (5.22), we have \[ \begin{gathered} E\left[(X-E(X))(Y-E(Y))\right]=E(X-E(X))E(Y-E(Y))=0, \end{gathered} \] because \(E(X-E(X))=E(X)-E(E(X))=E(X)-E(X)=0\). □

Interestingly, the addition rule also applies when we consider differences of independent random variables, because \[ \begin{aligned} V(X-Y) & = V(X)+V\left((-1)Y\right)\\ & = V(X)+(-1)^2V(Y)=V(X)+V(Y). \end{aligned} \]

5.3.5 The Return of a Portfolio

The addition rule for variances has an important application in financial mathematics. It implies that in the formation of portfolios of securities, diversification leads to a reduction in risk.

The return \(R\) of a security refers to the relative increase in value during a period. If at the beginning the value of the security was \(V_0\) monetary units and at the end of the period the value is \(V_1\) monetary units, then the return achieved with this investment in one period is \[ \begin{gathered} R=\frac{V_1-V_0}{V_0}. \end{gathered} \] A portfolio is a decision about how available capital is invested in two or more securities. Assume we form a portfolio of three securities \(A, B\), and \(C\). At the beginning of the period, we decide to invest the sums \(A_0,\;B_0,\;C_0\) in these papers. If at the end of the period these investments have values of \(A_1, B_1\), and \(C_1\), then the joint return \(R\) of the portfolio formed in this way is the relative change in value of the invested sum: \[ \begin{aligned} R&=\dfrac{A_1+B_1+C_1-(A_0+B_0+C_0)}{A_0+B_0+C_0}. \end{aligned} \tag{5.23}\] We set: \[ \begin{gathered} R_A=\frac{A_1-A_0}{A_0},\quad R_B=\frac{B_1-B_0}{B_0},\quad R_C=\frac{C_1-C_0}{C_0}, \end{gathered} \] these are the returns of the three securities. We can now express the joint return \(R\) with the help of the returns \(R_A, R_B\), and \(R_C\): \[ \begin{gathered} \begin{array}{rcl} R & = & \displaystyle \frac{A_1-A_0}{A_0+B_0+C_0} + \frac{B_1-B_0}{A_0+B_0+C_0} + \frac{C_1-C_0}{A_0+B_0+C_0}\\[4pt] & = & \displaystyle \frac{A_0}{A_0+B_0+C_0} \cdot \frac{A_1-A_0}{A_0} + \frac{B_0}{A_0+B_0+C_0} \cdot \frac{B_1-B_0}{B_0}+\\[4pt] & & \displaystyle \frac{C_0}{A_0+B_0+C_0} \cdot \frac{C_1-C_0}{C_0}\\[4pt] & = & \displaystyle \frac{A_0}{A_0+B_0+C_0} \cdot R_A + \frac{B_0}{A_0+B_0+C_0} \cdot R_B +\\[4pt] & & \displaystyle \frac{C_0}{A_0+B_0+C_0} \cdot R_C. \end{array} \end{gathered} \] The joint return is therefore the weighted average of the individual returns, where the capital shares (percentages) of the individual securities are to be used as weights: \[ \begin{gathered} \alpha=\frac{A_0}{A_0+B_0+C_0},\quad \beta=\frac{B_0}{A_0+B_0+C_0},\quad \gamma=\frac{C_0}{A_0+B_0+C_0} \end{gathered} \] In other words, the joint return of our portfolio is: \[ \begin{gathered} R=\alpha R_A+\beta R_B+\gamma R_C,\quad\text{where }\alpha+\beta+\gamma=1. \end{gathered} \tag{5.24}\] It is precisely these capital shares \(\alpha, \beta\), and \(\gamma\) that define the portfolio.

But why should investors form portfolios at all? Wouldn’t it be more sensible to invest all the capital in the paper that yields the highest return?

The main reason for the formation of a portfolio is that for most investment forms, the possible returns are not deterministic sizes but random variables. And it is the variance of the return that is important, for it is a measure of the risk associated with a financial investment. The higher the variance of the return, the riskier it is to invest in a security.

We can illustrate this intuitively as follows: suppose we have the choice between two securities \(A\) and \(B\) with returns \(R_A\) and \(R_B\). Further suppose that the expected returns are the same, so \(E(R_A)=E(R_B)\), but for the variances it should hold: \[ \begin{gathered} V(R_A)=0,\quad V(R_B)>0. \end{gathered} \] In this case, we have good reasons to prefer security \(A\), because although the average yield is the same, the yield achieved with paper \(B\) is associated with some uncertainty, which is greater the larger \(V(R_B)\). In fact, investments with a variance of zero in returns are called risk-free. These are, therefore, securities where the return over a certain period is guaranteed.

We now calculate the expected value and variance of \(R\), given in (5.24). The expected return is due to the linearity of the expectation value (Theorem 5.40 (b)): \[ \begin{gathered} E(R)=\alpha E(R_A)+\beta E(R_B)+\gamma E(R_C). \end{gathered} \] If the returns \(R_A,R_B\), and \(R_C\) are independent random variables, then the variance—and thus a measure of the risk associated with the portfolio—is due to Theorem 5.54 and Theorem 5.56: \[ \begin{gathered} V(R)=\alpha^2V(R_A)+\beta^2V(R_B)+\gamma^2V(R_C). \end{gathered} \tag{5.25}\] This formula, which follows from the law of variances addition, has remarkable consequences. It explains why it is sensible to diversify.

The next example shows the risk-reducing effect very clearly.

Exercise 5.57 (Diversification) An investor forms a portfolio from three securities \(A, B\), and \(C\). They invest 50% of the available funds in security \(A\), 30% in security \(B\), and 20% in security \(C\). The returns of these securities are independent random variables with the expected values 0.08, 0.05, and 0.03 and the same standard deviation of 0.02. Calculate the expected value and standard deviation of the return of this portfolio.

Solution: The return of the portfolio is \(R=0.5\cdot R_A+0.3\cdot R_B+0.2\cdot R_C\).

From this we have: \[ \begin{gathered} E(R)=0.5\cdot 0.08+0.3\cdot 0.05+0.2\cdot 0.03= 0.0610\,. \end{gathered} \] and \[ \begin{aligned} V(R)&=0.5^2\cdot V(R_A)+0.3^2\cdot V(R_B)+0.2^2\cdot V(R_C)\\ &=\left[0.5^2+0.3^2+0.2^2\right]\cdot 0.02^2=0.000152\,. \end{aligned} \] The standard deviation of the return is therefore \(\sqrt{0.000152}=0.012329\). It is thus substantially lower than the standard deviation of the return of each of the three securities. This means that the investment risk was significantly reduced by forming the portfolio. □

Remark 5.58 Three remarks or questions regarding this task:

In this task and in deriving (5.25), we explicitly assumed that the returns of the securities are independent random variables. What if this assumption does not hold?

In the task, the capital shares defining the portfolio were predetermined. But couldn’t we make these into decision variables and thus ask: what would an optimal portfolio look like?

For the capital shares \(\alpha, \beta,\) and \(\gamma\), it is of course true that \(\alpha+\beta+\gamma=1\), but that does not mean that all three capital shares must be positive. Do negative capital shares make sense at all, and what do they mean?

These are very exciting questions that we will explore in detail in Chapter 9.

Exercise 5.59 An investor wants to form a portfolio from two securities, \(A\) and \(B\), whose returns are independent random variables. The standard deviations of the returns are known to them: \(\sigma(R_A)=0.7\) and \(\sigma(R_B)=0.8\). How should they choose the capital shares of the two securities in the portfolio so that the investment risk is minimized?

Solution: Let \(\alpha\) be the capital share of \(A\), then the share of \(B\) is \(1-\alpha\). The joint return is therefore \(R=\alpha R_A+(1-\alpha) R_B\). The measure of the risk is the variance of \(R\): \[ \begin{aligned} V(R)&=V(\alpha R_A+(1-\alpha)R_B)=\alpha^2V(R_A)+(1-\alpha)^2V(R_B)\to\min. \end{aligned} \]